End-to-end QA with an AI testing tool for a modern dev workflow

Match their output with AI agents that act like your actual users.

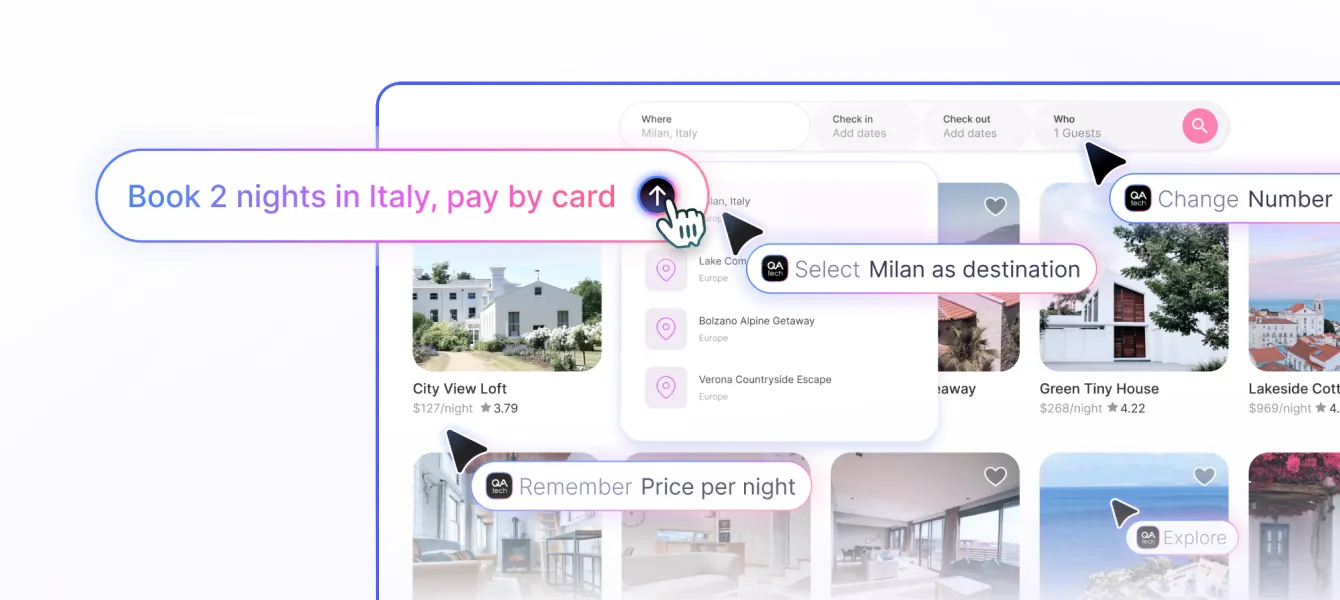

Test full user journeys, not scripted paths

Run E2E tests with AI across your product, from 3rd-party apps to email verification. Use QA agents for shift-left testing and catch what's broken or missing for your users.

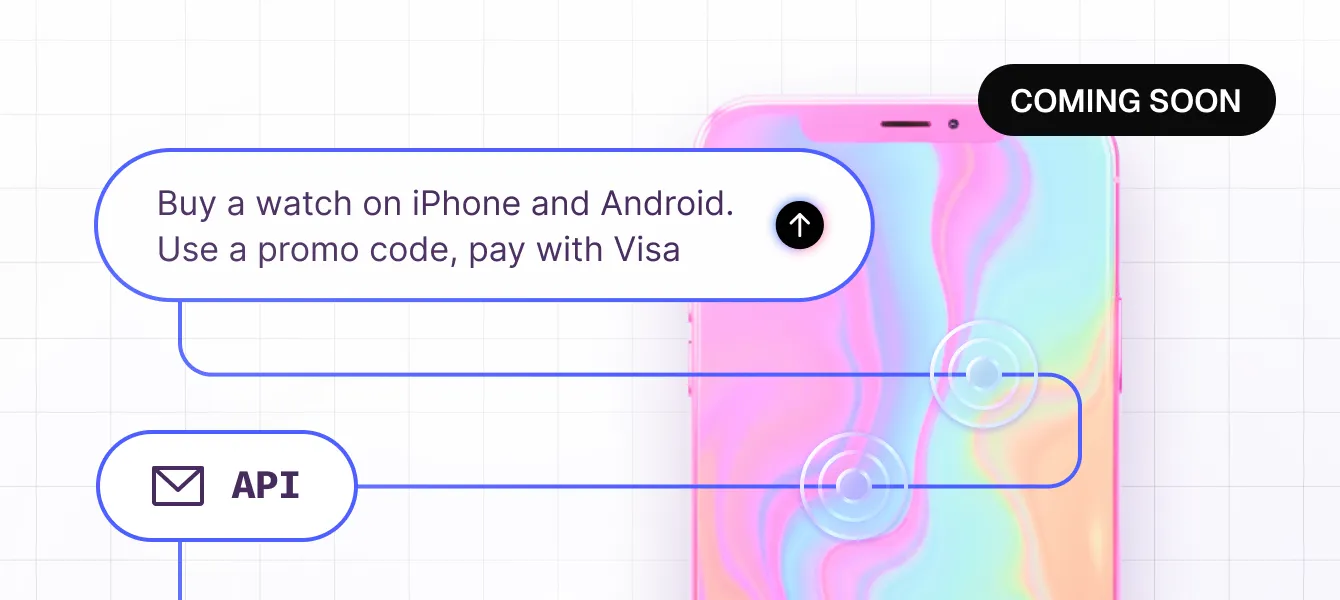

Never miss a mobile release

Catch cross-platform failures early. Test real user journeys that jump between mobile apps, web, and API calls.

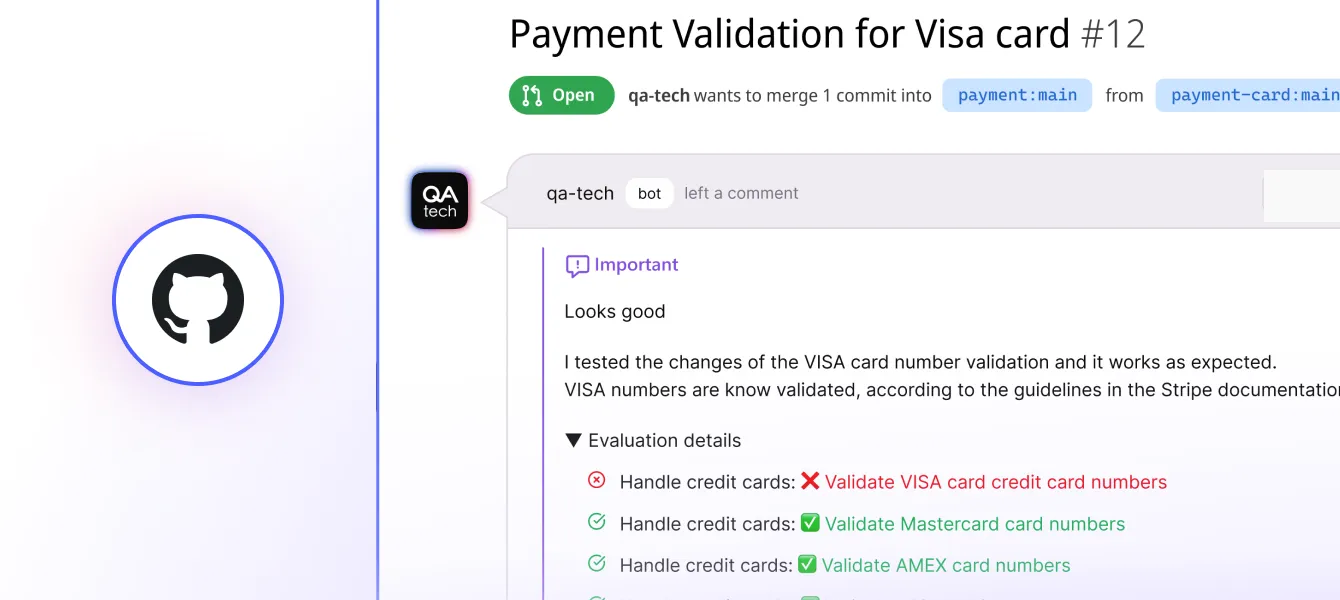

Instant feedback for your code and product

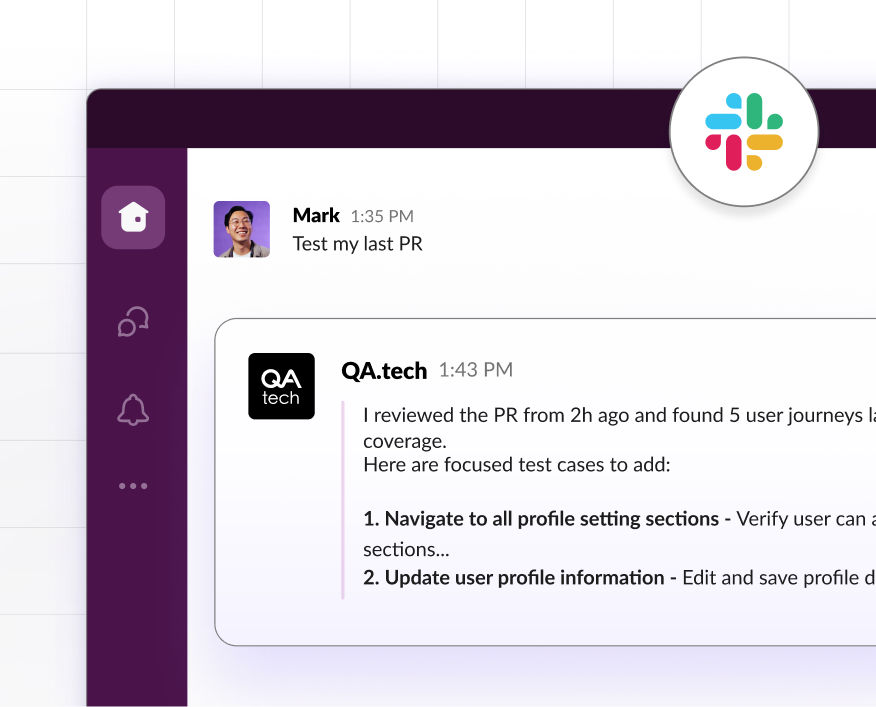

No infrastructure needed. Just point the QA.tech agent towards your environment to get PR reviews and exploratory tests up and running.

Ship on schedule without post-release surprises

Shorten the dev-to-QA feedback loop and save time across your development process. Reduce handoffs and keep your team aligned with the roadmap.

More flow, less flake

Cover new cases with QA agents that explore your product like your users do.

Ask the AI what to test next

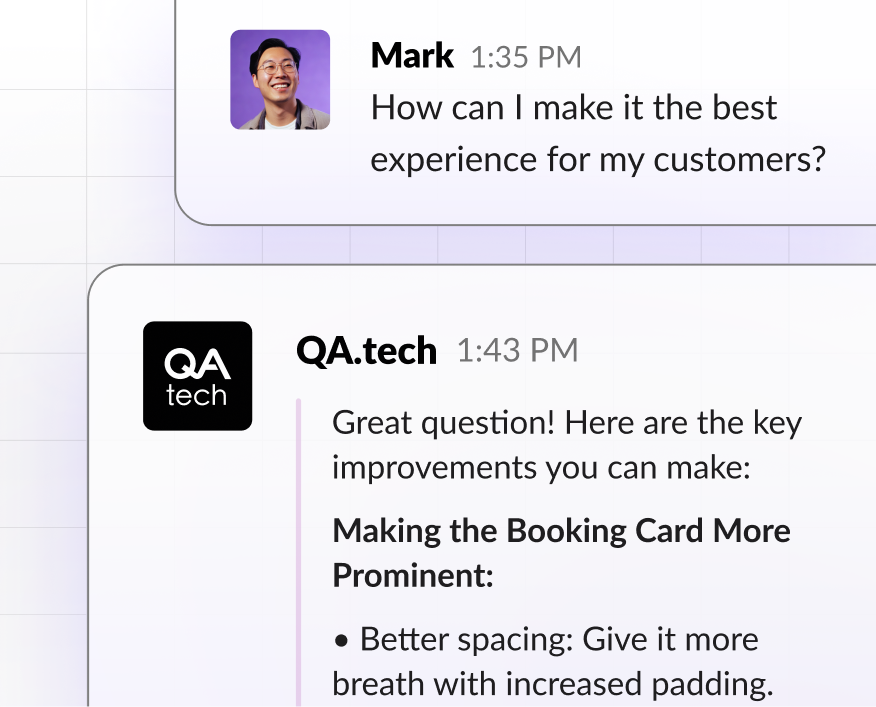

Tell the AI what you need in plain English. Get new E2E test ideas and let it run exploratory tests to increase your coverage.

Get actionable feedback

A great QA test report is more than a pass/fail status. Get actionable feedback on how to make your product better.

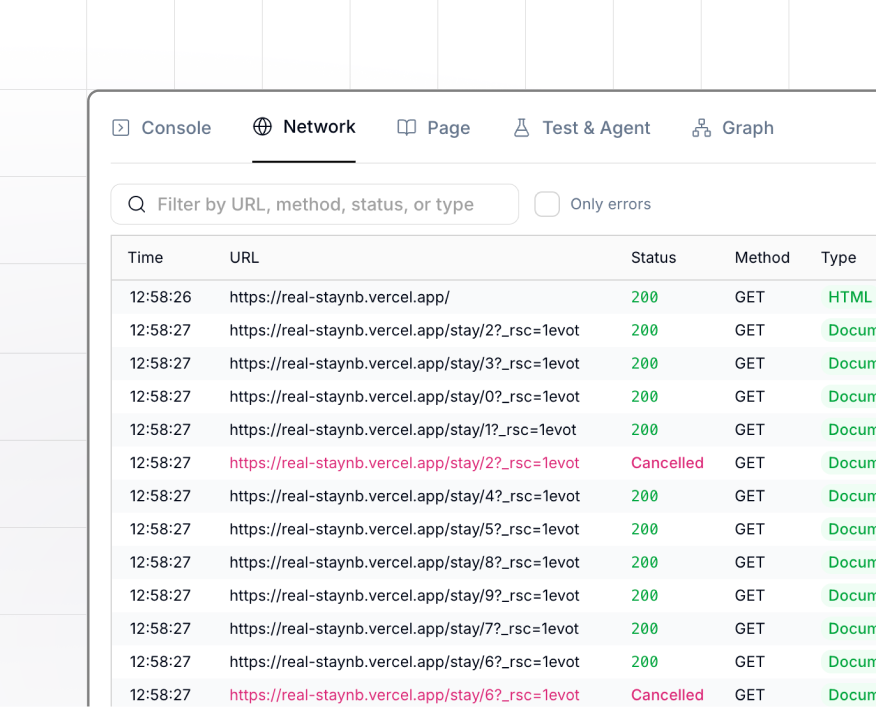

Use logs that actually help

Retrace broken paths step by step. Debug issues with console logs that show you what broke, where, and why.

Test beyond the happy path

Every user journey runs through a web of APIs, queues, and integrations your users never see. Test them all with AI agents.

Say goodbye to flaky tests

Set recurring tests for any flow. The AI runs each journey multiple times to eliminate flakiness and ensure reliable results.

Built for the tools your team loves

Get feedback in GitHub, Slack, Linear, and more. Trigger tests via CI, monitor with Prometheus, or extend custom systems via API.

Stop testing scripts.

Start testing experience.

dev team. Use an AI testing tool and bring balance back to your workflow.

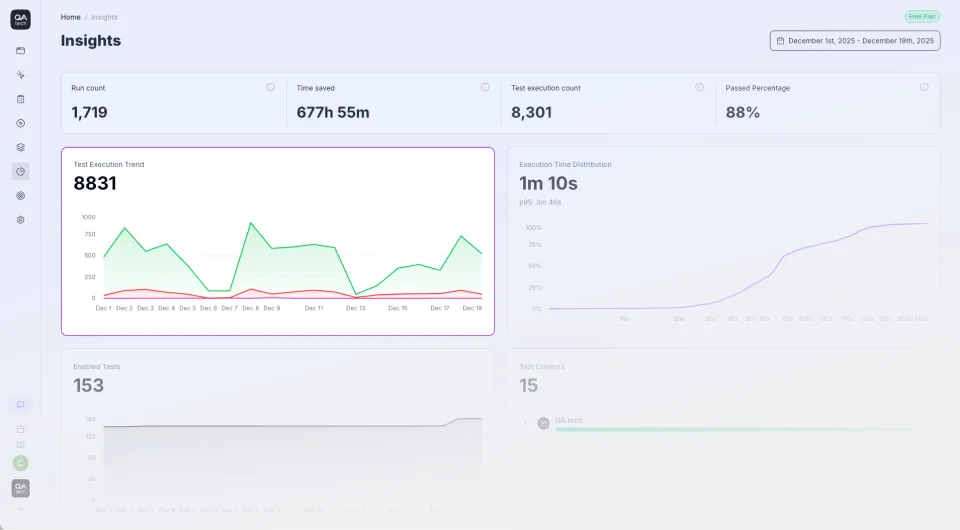

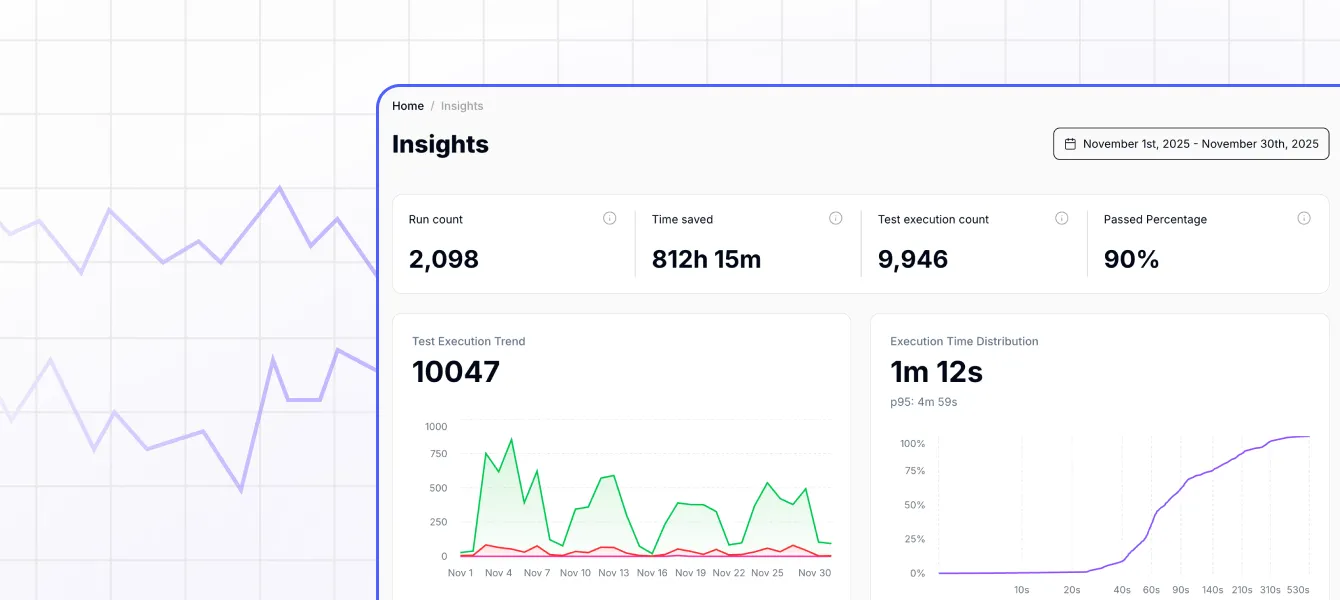

Cut weeks of QA work each quarter and spend that time creating products your customers love.