Introduction

We’re developing an AI-based QA testing agent that evaluates websites much like a human tester would. The AI agent navigates through websites based on a set of simple guidelines, attempting to detect bugs and issues along the way.

We began development a year ago and recently decided to benchmark our current AI agent against experienced human QA testers. The goal was to see how QA testing with AI compares with human skills.

The Test

For our benchmark, we used a real client’s SaaS product (SHOPLAB) that had a test suite with 55 test cases. Each case was of similar complexity and length. Here’s an example of a typical test case:

Test Example:

Create a new storefront

- Category: Storefronts

- Classification: POSITIVE

- Objective: Ensure it is possible to create a new storefront.

Steps:

- Click on the ‘Markets’ link to go to the page where markets are managed.

- Click on the ‘Storefronts’ link within the ‘Markets’ section.

- Click on the ‘New Storefront’ button.

- Fill in necessary details like Market, URL, Title, and Description.

- Navigate back to the ‘Storefronts’ list to verify the new storefront is listed.

The tester’s goal is to ensure the functionality works as expected. Any bugs found must be reported with screenshots and detailed reproduction steps. All tests were performed using Google Chrome on a desktop resolution.
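To make the shape of a test case concrete, here is a minimal sketch of how the example above could be written down as structured data. The `TestCase` class and its field names are hypothetical and chosen for illustration only; this is not the format the client's test suite or our agent actually uses.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class TestCase:
    """Hypothetical container for one test case (illustrative only)."""
    title: str
    category: str
    classification: str  # e.g. "POSITIVE", as in the example above
    objective: str
    steps: List[str] = field(default_factory=list)


# The "Create a new storefront" example, copied from the text above.
create_storefront = TestCase(
    title="Create a new storefront",
    category="Storefronts",
    classification="POSITIVE",
    objective="Ensure it is possible to create a new storefront.",
    steps=[
        "Click on the 'Markets' link to go to the page where markets are managed.",
        "Click on the 'Storefronts' link within the 'Markets' section.",
        "Click on the 'New Storefront' button.",
        "Fill in necessary details like Market, URL, Title, and Description.",
        "Navigate back to the 'Storefronts' list to verify the new storefront is listed.",
    ],
)

if __name__ == "__main__":
    print(f"{create_storefront.title} ({len(create_storefront.steps)} steps)")
```

Each of the 55 cases in the suite had roughly this shape: a short objective plus a handful of plain-language steps.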

The AI Agent

We used our latest AI agent, internally named the RAE-agent (Reason-Act-Evaluate). It’s powered by GPT-4 for reasoning, paired with custom models for UI understanding. (For more details, see our blog post on fine-tuning MiniCPM.)

The Human QA Tester

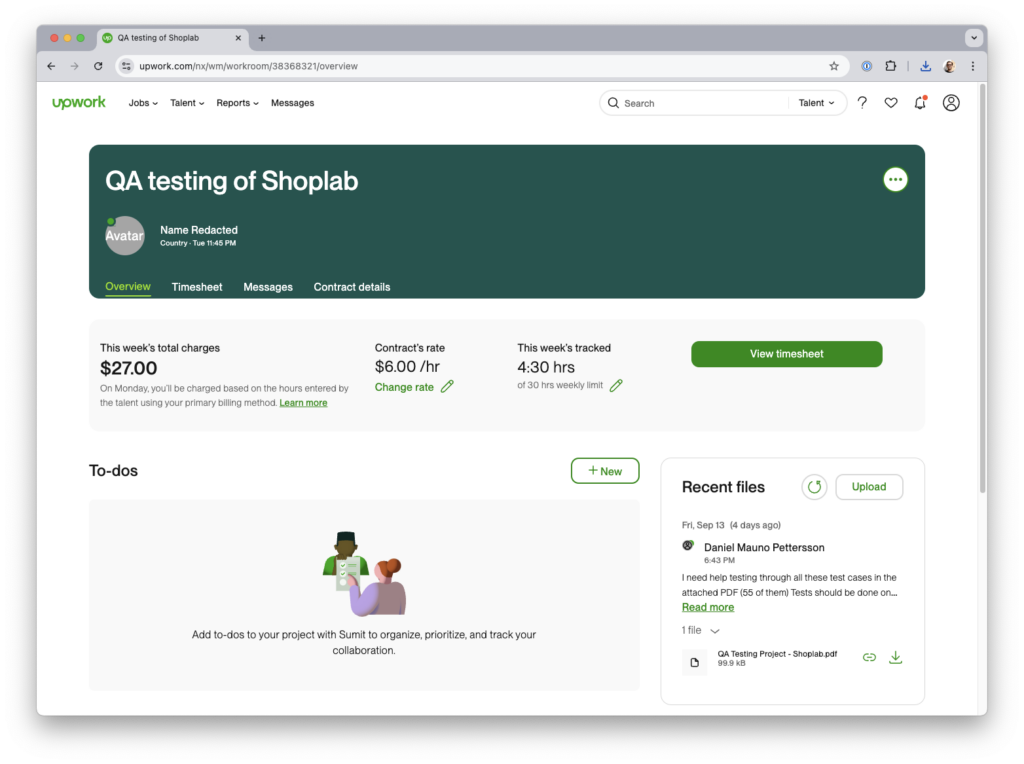

We hired a top-rated QA tester from Upwork, with a strong track record of reviews and ratings. The human tester received the following brief:

I need help testing through all these test cases in the attached PDF (55 of them)

Tests should be done on Chrome, desktop.

Report all bugs found in a document, with screenshots and steps to reproduce. Thanks

Our Expectations

We anticipated that the human tester would take slightly longer to complete the task. After all, a human tester must familiarize themselves with the product, handle interruptions, and take breaks. However, we also expected the human to excel in critical thinking and spot issues requiring deeper judgment.

The AI agent, on the other hand, was expected to handle simpler issues more efficiently and without the need for rest.

Results

The Stats

Both performed well and found largely the same critical bugs, meaning bugs that blocked users from fulfilling the test case objectives.

“Issues found” refers to minor issues and non-functional problems that affected the user experience but did not stop the user from completing the objective.

The human found issues such as “The confirmation pop-up experiences a delay in the process.”, while the agent focused more on non-functional issues such as slow-responding API calls and accessibility problems.

The agent found one more critical issue than the human did. It occurred in the test “Delete a Customer”, where a confirmation modal was supposed to open but never did, making it impossible to delete the customer entity.

| | Human | Agent |
|---|---|---|
| Tests Completed | 55 (100%) | 55 (100%) |
| Critical Bugs Found | 4 | 5 |
| Issues Found | 15 | 90 |

The Report

The human provided the results in a Google Sheet with a description of what had been done and the issues that were found. For the tests that failed, he included a link to a screenshot. Nice!

The agent recorded a video of the whole test run and provided step-by-step instructions on how to reproduce each issue, as well as console logs, network logs, and a list of the non-functional issues found.

Time Taken

This round went to the agent – it can start right away, and works without any interruptions.

The consultant accepted our task on a Friday, so counting from then would be a bit unfair. Let’s count the time from Monday morning instead. On Monday he completed 15 of the 55 tests and reported 3 hours worked. On Tuesday he reported another 90 minutes and completed the task, roughly 36 hours after starting the work.

Human total time: 4 hours 30 minutes billable, 36 hours from start to finish.

The agent completed all tests in just under 30 minutes. Knockout.

Cost

The consultant we hired was among the top-rated QA testers on Upwork and charged $6 per hour, which is cheap but within the normal range on Upwork. The total bill was $27.

QA.tech offers plans starting as low as $99 per month, which gives you up to 20 test cases.

If you want to run a one-time test suite – the human approach is still cheaper.

The Sum

For normal usage, which for SHOPLAB is 20 test runs a month (one release to be tested per working day), the hours and cost start to add up.

The Human approach would cost $540, and take 90 hours.

AI testing would get you there for $99, and take 10 hours.
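For readers who want to check the numbers, the monthly totals can be reproduced with a few lines of Python. This is only a sketch of the arithmetic: the inputs are the figures reported above, the agent's run time is rounded up to 30 minutes, and it assumes the $99 plan is a flat monthly fee that covers all 20 runs. The function and constant names are ours, made up for this example.

```python
# Inputs taken from the benchmark described in this post.
HUMAN_HOURLY_RATE_USD = 6.0     # consultant's hourly rate
HUMAN_HOURS_PER_RUN = 4.5       # 3 h on Monday + 1.5 h on Tuesday
AGENT_HOURS_PER_RUN = 0.5       # "just under 30 minutes", rounded up
AGENT_MONTHLY_PLAN_USD = 99.0   # assumption: flat fee covering every run
RUNS_PER_MONTH = 20             # one release tested per working day


def monthly_totals(runs: int = RUNS_PER_MONTH) -> dict:
    """Return monthly cost (USD) and hours for the human and agent approaches."""
    human_hours = runs * HUMAN_HOURS_PER_RUN
    return {
        "human_cost_usd": human_hours * HUMAN_HOURLY_RATE_USD,
        "human_hours": human_hours,
        "agent_cost_usd": AGENT_MONTHLY_PLAN_USD,
        "agent_hours": runs * AGENT_HOURS_PER_RUN,
    }


if __name__ == "__main__":
    for name, value in monthly_totals().items():
        print(f"{name}: {value}")
```

With a single run (`monthly_totals(1)`), the human cost comes out at $27, which is why the one-time test suite is still cheaper with a human.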

Conclusion

Our benchmark shows that a significant portion of standardized website testing can be handled by AI agents. This frees up human testers to focus on more qualitative, nuanced tasks. Additionally, AI-driven QA testing opens the door for teams that previously couldn’t afford dedicated human testers, making comprehensive testing more accessible.

Contact us if you would like to learn more about what we’re building, or book a call with me if you want to start using QA.tech in your dev process today!