Understanding the Website

Our automated testing process starts with a deep understanding of the website’s structure. The first step is to scan the site and build a comprehensive graph that represents it in an internal format. You can think of this graph as a “supercharged sitemap”: it not only maps out the pages and links but also captures all the possible user interface (UI) states and elements. This includes hidden modals, dynamic content, and other interactive components that a basic crawler might miss.

If you’re familiar with the concept of a knowledge graph, this is similar but focused specifically on UI and functionality. We leverage this graph to understand how users interact with the site and the various actions they can perform at different points in the interface. For a more in-depth understanding of the underlying technology, you can read our piece about the knowledge graph.
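To make the idea of a UI-focused graph more concrete, here is a minimal sketch in Python. It is an illustration only, not our internal format: the `UIGraph` class, the state names, and the action labels are all hypothetical. Nodes stand for UI states (including a hidden login modal), edges stand for user actions, and a breadth-first search finds one action sequence that leads from one state to another.

```python
from __future__ import annotations

from collections import deque
from dataclasses import dataclass, field


@dataclass
class UIGraph:
    """Directed graph: nodes are UI states, edges are user actions (hypothetical model)."""

    # Maps each state to a list of (action, resulting state) pairs.
    edges: dict[str, list[tuple[str, str]]] = field(default_factory=dict)

    def add_action(self, source: str, action: str, target: str) -> None:
        """Record that performing `action` in `source` leads to `target`."""
        self.edges.setdefault(source, []).append((action, target))
        self.edges.setdefault(target, [])

    def actions_to(self, start: str, goal: str) -> list[str] | None:
        """Breadth-first search for a shortest action sequence from start to goal."""
        queue = deque([(start, [])])
        seen = {start}
        while queue:
            state, path = queue.popleft()
            if state == goal:
                return path
            for action, next_state in self.edges.get(state, []):
                if next_state not in seen:
                    seen.add(next_state)
                    queue.append((next_state, path + [action]))
        return None  # goal is not reachable from start


# Illustrative states and actions; a real scan would discover these.
graph = UIGraph()
graph.add_action("home", "search 'Stockholm'", "results")
graph.add_action("results", "open event", "event_page")
graph.add_action("event_page", "click RSVP", "login_modal")  # hidden modal state
graph.add_action("login_modal", "log in", "rsvp_confirmed")

print(graph.actions_to("home", "rsvp_confirmed"))
```

Treating modals and other transient states as first-class nodes is what separates this kind of graph from a plain sitemap, which would list only the pages.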

Let’s use Meetup.com as a practical example. This is a platform where users can browse and attend meetups across a wide range of cities and topics. The site is well suited to showcasing our approach because of its complexity: dynamic content, authentication flows, and a variety of user interactions.

Finding an Objective

Once we have the graph, the next step is to derive objectives—things the user should be able to accomplish on the site. These objectives are the foundation of test cases. In software development terms, you can think of these objectives as the “headlines” of a manual test case, or, for product managers, as user stories.

An objective is typically action-oriented, focused on what the user wants to achieve. For instance, a clear objective for Meetup.com might be:

Objective:

Sign up for a meetup happening in Stockholm next week.

This objective is actionable and reflects a real-world user intent. It’s also specific enough that we can later expand it into a full test case with concrete steps. The key here is to focus on what the user cares about and what matters from a business or functional perspective.

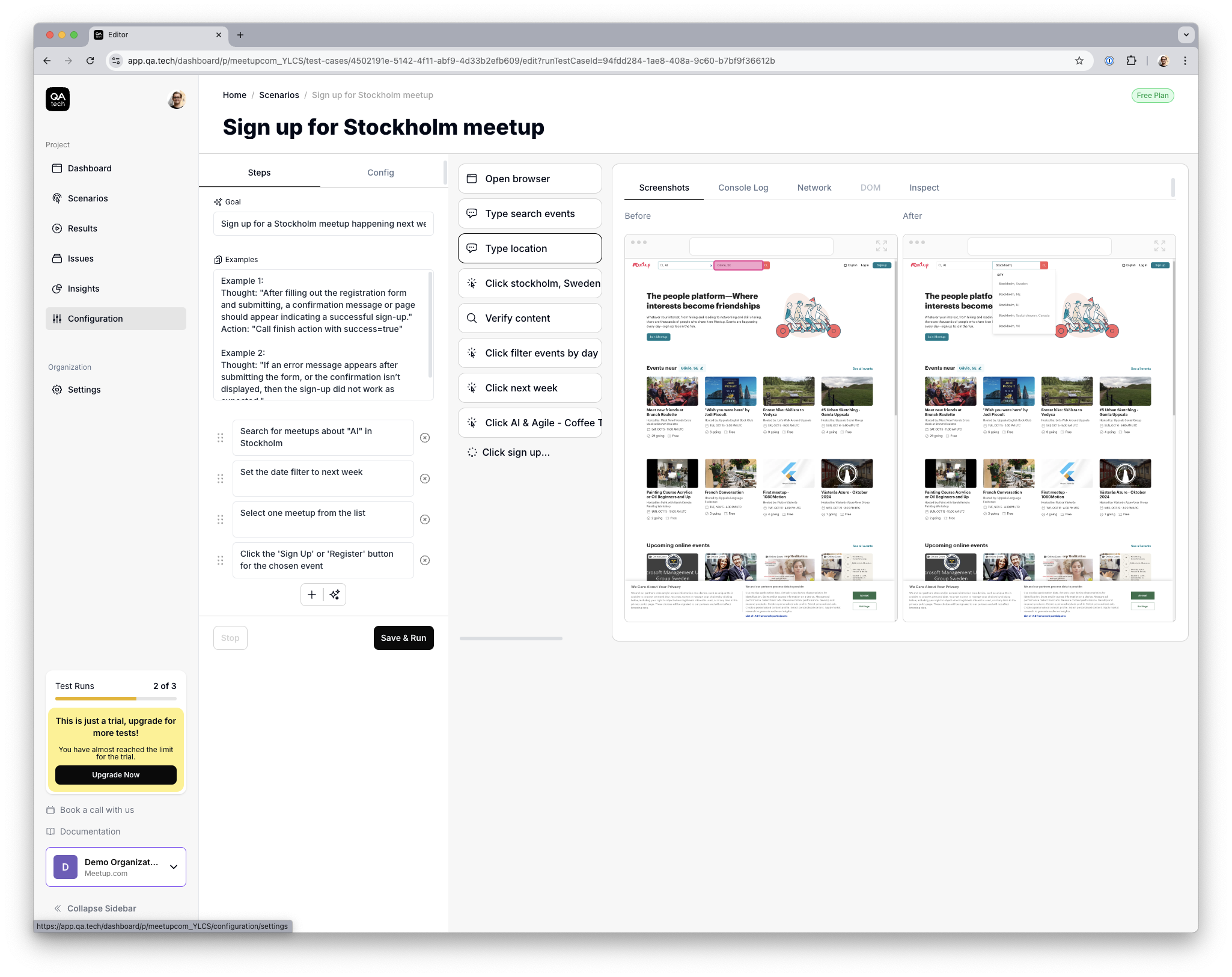

Expanding the Objective to a Test Case

Once we have the objective, we need to break it down into specific steps the user would follow to achieve it. This is where best practices and domain knowledge come into play. We generate these steps based on common user behavior patterns and interactions. Think of this as “filling in the gaps” between the objective and the actual clicks and inputs needed to perform the action.

For the Meetup.com example, the steps might look something like this:

- Navigate to the Meetup.com homepage

  The user begins by landing on the homepage or by opening the site.

- Search for meetups in Stockholm

  The user uses the search bar or location filter to specify “Stockholm.”

- Filter by date

  The user applies a filter to show meetups happening next week.

- Select a meetup

  The user clicks on an event that interests them.

- Sign up for the event

  The user clicks “Join” or “RSVP” to sign up for the event.

Each step represents a user interaction that should happen seamlessly. At this stage, the test case is a hypothesis based on our understanding of the site’s structure and user behavior.
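One way to picture the expanded test case is as a small data structure that pairs the objective with its ordered steps. The sketch below is an assumption made for illustration: the `Step` and `UITestCase` names and their fields are hypothetical and do not describe our actual internal representation.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Step:
    title: str        # short imperative label for the step
    description: str  # what the user does at this step


@dataclass
class UITestCase:
    objective: str
    steps: list[Step] = field(default_factory=list)

    def outline(self) -> str:
        """Render the objective followed by a numbered list of step titles."""
        lines = [f"Objective: {self.objective}"]
        lines += [f"{i}. {step.title}" for i, step in enumerate(self.steps, start=1)]
        return "\n".join(lines)


meetup_case = UITestCase(
    objective="Sign up for a meetup happening in Stockholm next week.",
    steps=[
        Step("Navigate to the Meetup.com homepage",
             "The user lands on the homepage or opens the site."),
        Step("Search for meetups in Stockholm",
             "The user uses the search bar or location filter."),
        Step("Filter by date",
             "The user applies a filter to show meetups happening next week."),
        Step("Select a meetup",
             "The user clicks on an event that interests them."),
        Step("Sign up for the event",
             "The user clicks 'Join' or 'RSVP'."),
    ],
)

print(meetup_case.outline())
```

Keeping the steps as plain data, separate from any browser automation code, makes it straightforward to insert, remove, or reorder steps later without touching the runner.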

Evolution of the Test Case

Here’s where things get iterative. The first run of the test may not succeed. Maybe the sign-up button is hidden behind an authentication wall, or maybe the dynamic content loads too slowly for our test automation to catch. This is where our system shines: it tries to perform the test and reflects on the previous attempt to refine the process.

If a step fails, we analyze the failure, adapt the test case, and re-run it. The system evolves the test case, taking into account the nuances of the site, such as asynchronous content loading, different UI states, or even intermittent failures caused by third-party integrations.

For example, if the test case fails during the sign-up process because the user is required to log in first, the system adapts by incorporating a login step before trying again.

This iterative process continues until the test case performs reliably under various conditions. Once it’s stable, we consider the test case “done.”
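The run, analyze, adapt, and re-run cycle can be sketched as a simple loop. Everything below is a simplified assumption: `run_steps` is a stand-in that simulates a site requiring login before sign-up (a real runner would drive a browser), and `adapt` knows only the one fix described above. The function names, the `Failure` type, and the `"login_required"` reason code are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Failure:
    step_index: int  # position of the step that failed
    reason: str      # hypothetical reason code reported by the runner


def run_steps(steps: list[str]) -> Failure | None:
    """Stand-in for a real browser run against a simulated site.

    The simulated site rejects the sign-up step unless a log-in step
    has already been executed. Returns None when every step passes.
    """
    logged_in = False
    for index, step in enumerate(steps):
        if step == "Log in":
            logged_in = True
        elif step == "Sign up for the event" and not logged_in:
            return Failure(index, "login_required")
    return None


def adapt(steps: list[str], failure: Failure) -> list[str]:
    """Return a revised copy of the steps that addresses the failure."""
    if failure.reason == "login_required":
        i = failure.step_index
        return steps[:i] + ["Log in"] + steps[i:]
    raise ValueError(f"no known adaptation for {failure.reason!r}")


def evolve(steps: list[str], max_attempts: int = 5) -> tuple[list[str], int]:
    """Run, adapt on failure, and re-run until a pass or the attempt limit."""
    for attempt in range(1, max_attempts + 1):
        failure = run_steps(steps)
        if failure is None:
            return steps, attempt
        steps = adapt(steps, failure)
    raise RuntimeError("test case did not stabilize within the attempt limit")


initial_steps = [
    "Navigate to the Meetup.com homepage",
    "Search for meetups in Stockholm",
    "Filter by date",
    "Select a meetup",
    "Sign up for the event",
]

final_steps, attempts = evolve(initial_steps)
print(attempts)
print(final_steps)
```

This sketch stops at the first passing run. To match the stability criterion described above, a fuller version would repeat the passing test several times, and under varied conditions, before marking it “done.”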

Conclusion

By building a comprehensive graph of the website, deriving actionable objectives, and iterating on test cases, we automate complex testing scenarios with minimal human intervention. Our process is particularly well-suited for websites with dynamic content and complex user flows, like Meetup.com. Through a combination of deep site understanding, structured objectives, and evolutionary testing, we ensure that users can perform key actions reliably and efficiently.

This method not only saves developers time but also improves the reliability of automated tests, reducing the risk of undetected bugs slipping into production.

Would you like to learn more about how we can apply this to your specific website? Get in touch, and let’s discuss how we can streamline your testing process.