Understanding UIs Part II: Context and Content Matter

Introduction

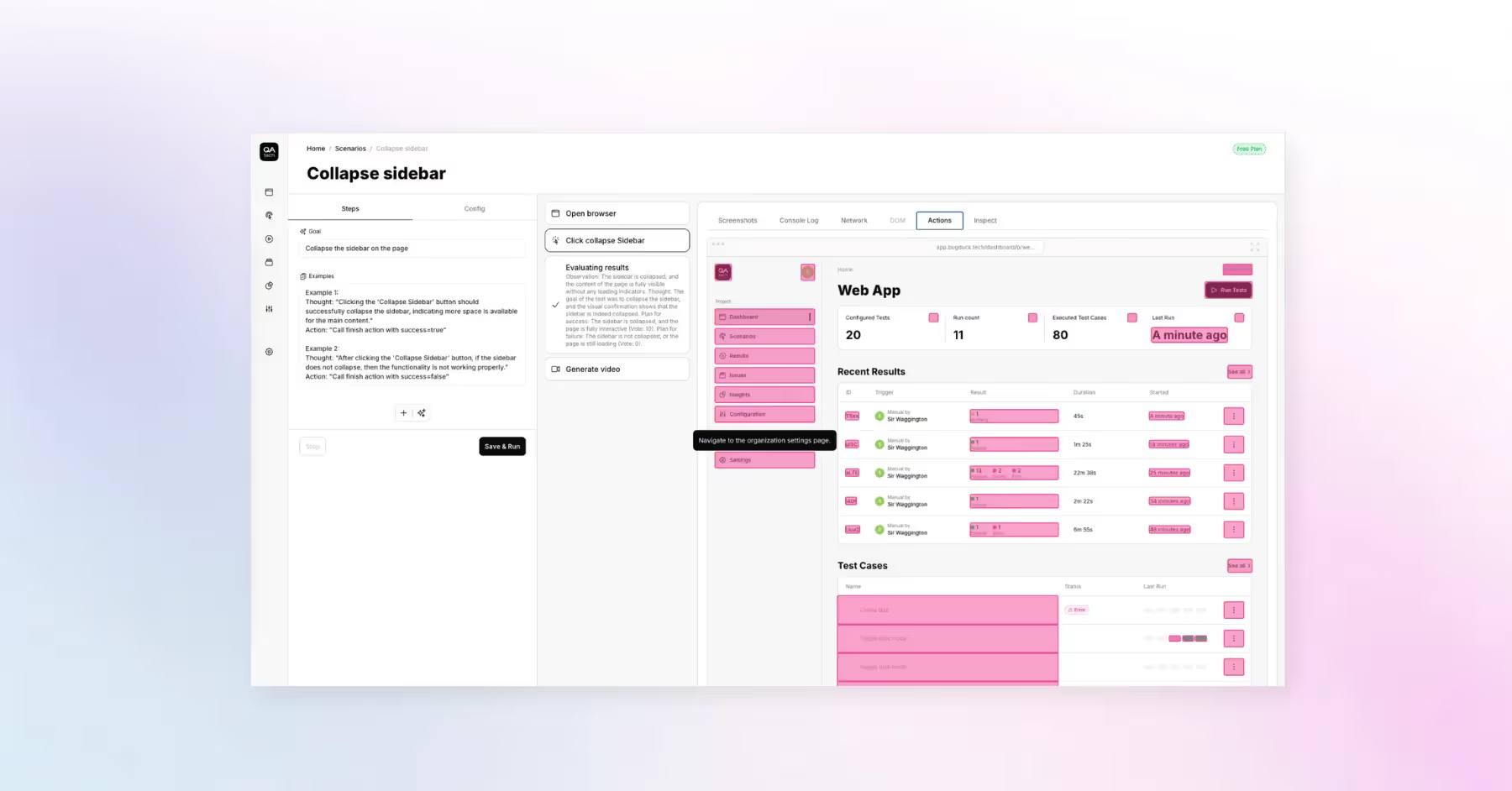

Automated end-to-end (E2E) testing is changing how development teams work: it saves time and removes much of the tedium of manual testing. To make this automation effective, we need to train our AI models to understand user interfaces (UIs).

The Challenge: Similarity Can Be Misleading

Take a look at this listing. The UI elements repeat: each person has a similar card with its own call-to-action (CTA) button. For a human, identifying which button books a meeting with Cyril is straightforward. For an AI model, it is not that simple.

In some cases, larger models can pick the correct element when given a targeted prompt, such as “Which button books a meeting with Cyril?” Smaller models struggle: they may confuse buttons that look alike, especially when many similar options are on screen.
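
To see why repeated cards are hard, consider a deliberately simplified toy matcher that scores each button by how many words of the query appear in its features. This is only an illustration, not how any production model works; the `UIElement` record, its fields, and the names on the cards are hypothetical. When the matcher sees only the button itself, every CTA ties; once the card's text is included as context, the tie breaks.

```python
from __future__ import annotations
from dataclasses import dataclass


@dataclass(frozen=True)
class UIElement:
    """Hypothetical record of one clickable element."""
    label: str       # visible text on the button
    style: str       # coarse visual style, e.g. "primary"
    card_title: str  # heading of the card the button sits in (its context)


def tokens(text: str) -> set[str]:
    return set(text.lower().split())


def score(query: str, el: UIElement, use_context: bool) -> int:
    """Count how many query words appear in the element's features."""
    features = tokens(el.label) | tokens(el.style)
    if use_context:
        features |= tokens(el.card_title)
    return len(tokens(query) & features)


def best_matches(query: str, elements: list[UIElement], use_context: bool) -> list[UIElement]:
    """Return every element tied for the highest score."""
    scores = [score(query, el, use_context) for el in elements]
    top = max(scores)
    return [el for el, s in zip(elements, scores) if s == top]


# A hypothetical listing: three identical CTA buttons on three person cards.
listing = [UIElement("Book a meeting", "primary", name) for name in ("Cyril", "Maya", "Omar")]
query = "Book a meeting with Cyril"

print(len(best_matches(query, listing, use_context=False)))  # 3: every button ties
print([el.card_title for el in best_matches(query, listing, use_context=True)])  # ['Cyril']
```

The point carries over to learned models: if two elements are identical in everything the model looks at, no scoring function can separate them, so the surrounding content has to be part of the input.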

For AI-driven QA testing to be effective, the model must perform these tasks accurately and consistently.

Contrastive Learning: A Solution

Contrastive learning offers a promising solution to this challenge. It teaches a model to tell apart elements that look alike but serve different functions. The core idea is to train on pairs of examples: positive pairs, whose two elements perform the same action, and negative pairs, whose elements perform different actions.

For instance, a login button in its hovered state and the same button in its normal state look different but do the same thing, so together they form a positive pair. Conversely, two “Contact” buttons can look identical yet serve different purposes depending on their context, such as the card they sit in, so together they form a negative pair.
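
A minimal sketch of how such pairs might be labeled, assuming each collected element carries a ground-truth `action` describing what clicking it does. The `Sample` type, the file names, and the action names are hypothetical. The key point is that the label comes from the action, not from the element's appearance:

```python
from itertools import combinations
from typing import NamedTuple


class Sample(NamedTuple):
    """Hypothetical labeled UI sample: a screenshot crop and what clicking it does."""
    crop_id: str
    action: str


samples = [
    Sample("login_normal.png", "open_login"),
    Sample("login_hovered.png", "open_login"),         # looks different, same action
    Sample("contact_card_cyril.png", "contact_cyril"),
    Sample("contact_card_other.png", "contact_other"),  # looks identical, different action
]


def make_pairs(samples):
    """Label every pair 1 (positive) if both elements perform the same action, else 0 (negative)."""
    return [(a.crop_id, b.crop_id, int(a.action == b.action))
            for a, b in combinations(samples, 2)]


pairs = make_pairs(samples)
positives = [p for p in pairs if p[2] == 1]
print(positives)                    # [('login_normal.png', 'login_hovered.png', 1)]
print(len(pairs) - len(positives))  # 5 negative pairs
```

Labeling every combination yields far more negatives than positives; real pipelines typically sample negatives, and hard negatives such as the two identical “Contact” buttons are the most informative ones.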

By feeding the model diverse examples, we improve its ability to recognize and tell apart UI components that could otherwise be confused. Training on these pairs teaches the model to place the embeddings of elements that perform the same action close together in the embedding space. Elements with the same function end up near each other, while elements that look alike but serve different purposes are pushed further apart.
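
One common way to express this objective is the margin-based pairwise contrastive loss of Hadsell, Chopra, and LeCun (2006). The post does not say which loss is used in practice, so treat this as an illustrative sketch; the embedding values below are made up. Positive pairs are penalized by their squared distance, and negative pairs are penalized only while they sit closer than a `margin`:

```python
import math


def contrastive_loss(u, v, same_action, margin=1.0):
    """Margin-based pairwise contrastive loss for one pair of embeddings.

    same_action=True  -> positive pair: loss grows with squared distance (pull together).
    same_action=False -> negative pair: loss is nonzero only while the pair is
                         closer than `margin` (push apart, then stop caring).
    """
    d = math.dist(u, v)  # Euclidean distance between the two embeddings
    if same_action:
        return d ** 2
    return max(0.0, margin - d) ** 2


# Made-up 2-D embeddings for illustration.
login_normal, login_hovered = [0.9, 0.1], [0.8, 0.2]
contact_cyril, contact_other = [0.5, 0.5], [0.55, 0.5]

print(round(contrastive_loss(login_normal, login_hovered, True), 4))    # 0.02: already close
print(round(contrastive_loss(contact_cyril, contact_other, False), 4))  # 0.9025: too close
print(contrastive_loss([0.0, 0.0], [2.0, 0.0], False))                  # 0.0: beyond margin
```

The margin is what keeps the objective from trying to push negative pairs infinitely far apart: once two different-action elements are at least `margin` away, they stop contributing to the loss.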

Training Process

The training process involves several key steps:

- Data Collection: Gather diverse UI examples that include variations of buttons, cards, and other elements. This data should encompass multiple contexts to reflect real-world usage.

- Pair Creation: Build pairs of data points and label each pair as positive (both elements perform the same action) or negative (they perform different actions). Positive pairs can be the same button in different states; negative pairs can be similar-looking buttons with different functions.

- Model Training: Feed these pairs into the model. The model learns to minimize the distance between the embeddings of elements that perform the same action and to increase the distance between elements that perform different actions.

- Validation and Testing: Once trained, validate the model on UIs it did not see during training. Track how accurately it distinguishes similar-looking buttons and other elements.

- Iterative Improvement: Continuously refine the model based on testing feedback. Incorporate additional data or adjust training parameters as needed.
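
The steps above can be sketched end to end in a few dozen lines. This is a toy under loudly stated assumptions, not the production pipeline: each element is described by three hypothetical hand-crafted features (`[hovered, on_cyril_card, on_other_card]`) instead of screenshots, a linear projection `W` stands in for a neural encoder, and training is plain gradient descent on the margin-based contrastive loss with a hand-derived gradient. One element is held out for validation.

```python
import math

# Hypothetical hand-crafted features: [hovered, on_cyril_card, on_other_card].
FEATURES = {
    "login_normal":        [0.0, 0.0, 0.0],
    "login_hovered":       [1.0, 0.0, 0.0],
    "contact_cyril":       [0.0, 1.0, 0.0],
    "contact_other":       [0.0, 0.0, 1.0],
    "contact_cyril_hover": [1.0, 1.0, 0.0],  # only seen during validation
}
# (element_a, element_b, 1 if same action else 0)
TRAIN_PAIRS = [("login_normal", "login_hovered", 1),   # different look, same action
               ("contact_cyril", "contact_other", 0)]  # same look, different action
VAL_PAIRS = [("contact_cyril", "contact_cyril_hover", 1),
             ("contact_cyril_hover", "contact_other", 0)]
MARGIN = 2.0


def embed(W, x):
    """Linear projection: one output dimension per row of W."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def loss_and_grad(W, pairs):
    """Summed margin-based contrastive loss over `pairs` and its gradient w.r.t. W."""
    total = 0.0
    grad = [[0.0] * len(W[0]) for _ in W]
    for a, b, same in pairs:
        delta = [p - q for p, q in zip(FEATURES[a], FEATURES[b])]
        e = embed(W, delta)  # W is linear, so W x_a - W x_b == W delta
        d = math.hypot(*e)
        if same:
            total += d ** 2
            coef = [2.0 * ei for ei in e]  # dL/de for L = |e|^2
        elif d < MARGIN:
            total += (MARGIN - d) ** 2
            # dL/de for L = (m - |e|)^2; undefined at d == 0, so skip the update there
            coef = [-2.0 * (MARGIN - d) * ei / d for ei in e] if d > 0 else [0.0] * len(e)
        else:
            continue  # negative pair already beyond the margin: no loss, no gradient
        for i, ci in enumerate(coef):  # dL/dW[i][j] = dL/de[i] * delta[j]
            for j, dj in enumerate(delta):
                grad[i][j] += ci * dj
    return total, grad


def train(W, pairs, lr=0.1, steps=50):
    """Plain gradient descent; returns the trained W and the loss at each step."""
    history = []
    for _ in range(steps):
        loss, grad = loss_and_grad(W, pairs)
        history.append(loss)
        W = [[w - lr * g for w, g in zip(row, g_row)] for row, g_row in zip(W, grad)]
    return W, history


def accuracy(W, pairs):
    """Predict 'same action' when two embeddings are closer than half the margin."""
    hits = [(math.dist(embed(W, FEATURES[a]), embed(W, FEATURES[b])) < MARGIN / 2) == bool(same)
            for a, b, same in pairs]
    return sum(hits) / len(hits)


W0 = [[1.0, 0.0, 0.0],
      [0.0, 1.0, 0.0]]  # 3 features -> 2-D embeddings
W, history = train(W0, TRAIN_PAIRS)
print(history[0], history[-1] < 1e-6)                   # 2.0 True
print(accuracy(W0, VAL_PAIRS), accuracy(W, VAL_PAIRS))  # 0.5 1.0
```

Before training, the held-out hovered-versus-normal “Contact” pair is not recognized as the same action, so validation accuracy is 50%. After training, the projection has learned to ignore the hover feature and rely on the card context, and both held-out pairs are classified correctly. In a real pipeline, the iterative-improvement step might add new pairs wherever validation fails.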

In-Depth Reading

For a deeper exploration, my colleague Alexandra, PhD, has written a detailed blog post on this topic.

If you want to see how AI can enhance your site’s testing through advanced QA, feel free to contact us or book a call with me.
