Fast Features, Slow Foundations: Rethinking QA for the AI-First Organization

The Two-Speed Company

When Airtable’s CEO Howie Liu restructured his product organization, he didn’t add another layer of management. He split the company into two distinct modes of thinking.

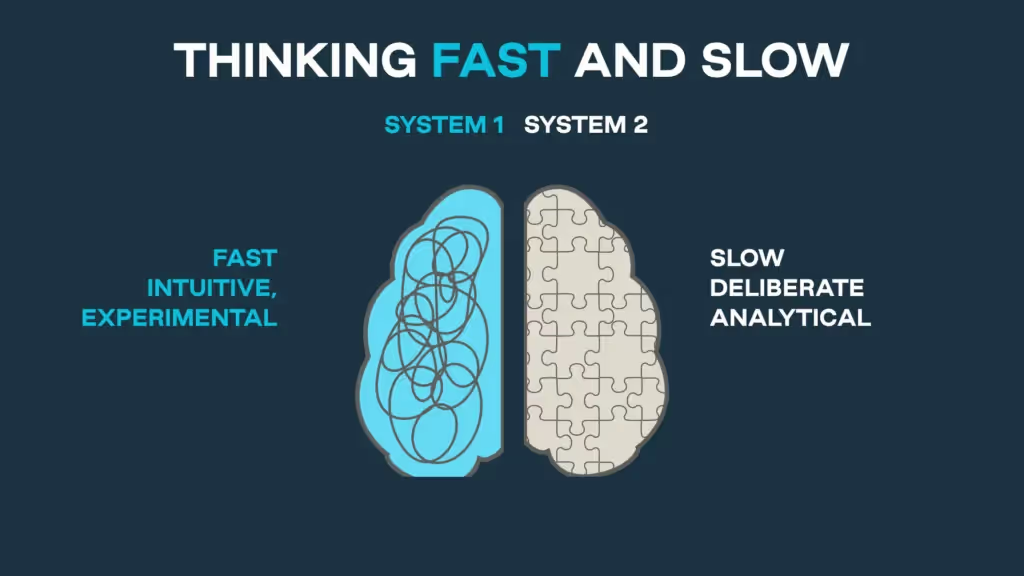

The fast thinking group ships new AI features weekly. Their mandate is urgency: prototype, release, learn, repeat. The slow thinking group works on the deep foundations: the data infrastructure and scale architecture that can’t be hacked together in a sprint. One group thrives on speed, the other on durability. Together, they allow Airtable to move like a startup without snapping under enterprise demand. The model is inspired by Daniel Kahneman’s Thinking, Fast and Slow: System 1 thinking is quick, intuitive, and experimental, while System 2 is deliberate, analytical, and built for long-term stability. Airtable translated that cognitive framework into org design.

This dual-speed model isn’t just clever org design. It’s a recognition that AI changes the clock speed of software itself. Products now evolve in weeks, not quarters. Teams that can’t operate in two gears (one sprinting, one laying track) risk burning out or breaking down.

And here’s the catch: most companies apply this thinking to product development only. They restructure design, engineering, and product management around fast vs. slow. But their QA runs at one speed — the old one. That mismatch is why even the most AI-accelerated companies still stall at the last mile: untested flows, brittle scripts, and release trains that derail under their own velocity.

The thesis of this piece is simple: if you want to operate as a true AI-native organization, you must bring the two-speed model into QA itself. Fast QA to keep pace with fast features. Slow QA to protect the rails beneath them. Without both, speed is just acceleration into failure.

The Rise of the Fast Thinking Org

AI coding tools have done something remarkable: they’ve collapsed the distance between an idea and its first working version. With Claude Code, GitHub Copilot, Cursor, and similar assistants, engineers can spin up prototypes in hours. At Airtable, this acceleration led to a structural change: a dedicated fast thinking group tasked with shipping new AI capabilities on a near-weekly cadence.

This isn’t just speed for its own sake. As Howie Liu puts it, CEOs themselves must become individual contributors again — playing with the tools, prototyping, and “tasting the soup” rather than delegating innovation. Prototypes replace decks. Interactive demos replace memos. Culture shifts toward experimentation over planning.

The payoff is obvious: product velocity that matches the pace of AI’s own evolution. In Howie’s words, “every software product has to be refounded,” because each model release introduces entirely new capabilities. Companies that adapt fast capture the upside. Those that don’t become laggards overnight.

But there’s a shadow side. When one part of the system accelerates, others show strain. Vilhelm von Ehrenheim, QA.tech’s Chief AI Officer, calls this the theory of constraints in action: boost coding speed, and QA immediately becomes the bottleneck. Manual testers can’t keep up with weekly feature drops. Scripted tests rot faster than they’re written. The result: “fast thinking” teams outpace their own safety nets.

The lesson is clear: building a fast lane for development only works if you build one for quality as well.

The Hidden Bottleneck: QA at One Speed

Speeding up one part of a system doesn’t increase throughput if everything else runs at yesterday’s pace. That’s the essence of the theory of constraints, and it’s exactly what’s happening in software development today.
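A toy calculation makes the constraint concrete. The sketch below assumes a pipeline whose weekly throughput is capped by its slowest stage. The stage names and capacity numbers are hypothetical, chosen only for illustration.

```python
def pipeline_throughput(stage_capacities: dict[str, float]) -> tuple[str, float]:
    """Return the bottleneck stage and the throughput it allows (changes per week)."""
    bottleneck = min(stage_capacities, key=lambda stage: stage_capacities[stage])
    return bottleneck, stage_capacities[bottleneck]


# Hypothetical capacities in changes per week; not measured data.
before = {"coding": 10, "qa": 12, "release": 20}
after = {"coding": 20, "qa": 12, "release": 20}  # coding doubles, QA is unchanged

print(pipeline_throughput(before))  # ('coding', 10)
print(pipeline_throughput(after))   # ('qa', 12)
```

In this made-up example, doubling coding capacity lifts end-to-end throughput only from 10 to 12 changes per week, because QA becomes the limiting stage.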

AI-boosted engineers can now double their commit velocity. But QA hasn’t evolved in step. Manual testers still rely on checklists and clicks. Scripted suites, meant to provide safety nets, become brittle “shadow codebases” that break whenever the product changes. Instead of protecting velocity, they consume it.

Von Ehrenheim frames the problem bluntly:

“As developers accelerate, QA is the new bottleneck. You can ship code faster, but you can’t ship safe code faster with 20th-century QA.”

The cost shows up in hard numbers. Teams lose 3.2 developer days per sprint just maintaining scripts. Detection lags stretch to 14 days, meaning bugs escape into production while revenue quietly leaks away. The scale is just as stark: one QA.tech customer logged 11,000 user flows in a single month, a volume that neither human testers nor scripted frameworks could cover.

This is the paradox of the AI era: development moves at light speed, while QA crawls. Companies celebrate how fast they can code, ignoring the fact that untested flows are silently eroding trust, deals, and revenue. The “release train” looks impressive, right up until it derails.

Applying Fast and Slow to QA

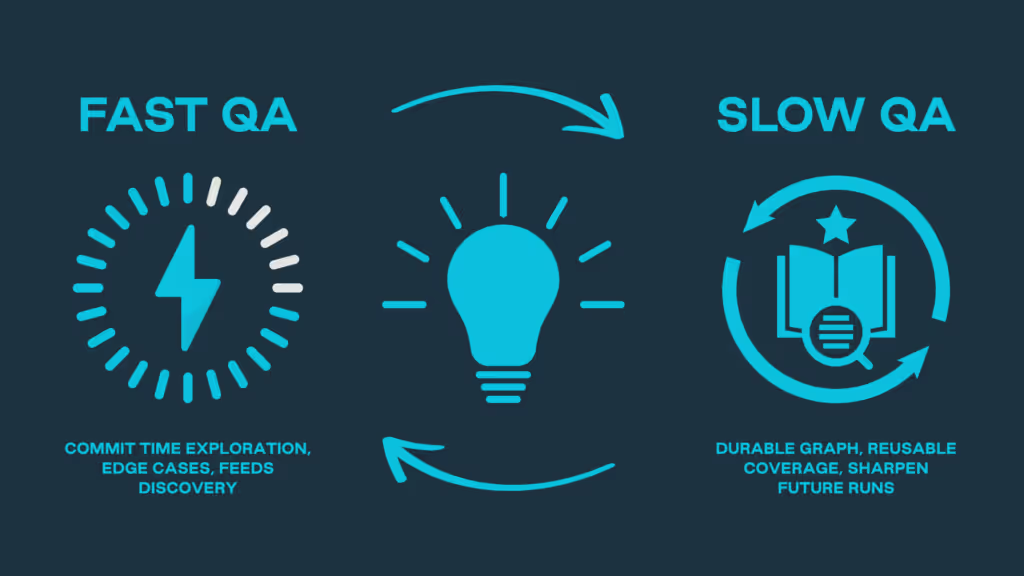

If product teams now operate in two speeds (sprinting for features, planning for infrastructure), QA must mirror that model. Otherwise, the system tears itself apart.

Fast QA is about keeping pace with feature velocity. Here, AI agents act like power users on caffeine: clicking, scrolling, and breaking things the moment code lands. They don’t wait for a manual script; they generate their own flows.

This turns QA into a living map of the product rather than a brittle checklist. Fast QA means commit-time validation, where bugs are caught in hours, not weeks.

Slow QA is about durability. Instead of chasing every UI tweak, it builds the graph-based backbone that maps features, dependencies, and flows across the application. This context makes testing scalable. It’s the difference between a one-off bug report and a system that understands how a payment page, login flow, and database all interact. In QA.tech’s architecture, every action and page rolls up into features, and features roll up into the project itself.
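One way to picture that roll-up is as a small data structure. The sketch below is an assumption for illustration, not QA.tech's actual schema: the `Feature` and `Project` classes, the `depends_on` field, and the `affected_by_page` helper are all hypothetical names.

```python
from dataclasses import dataclass, field


@dataclass
class Feature:
    name: str
    pages: set[str] = field(default_factory=set)       # pages that roll up into this feature
    depends_on: set[str] = field(default_factory=set)  # names of features this one relies on


@dataclass
class Project:
    features: dict[str, Feature] = field(default_factory=dict)

    def add(self, feature: Feature) -> None:
        self.features[feature.name] = feature

    def affected_by_page(self, page: str) -> set[str]:
        """Features that own the page, plus every feature depending on them, transitively."""
        affected = {f.name for f in self.features.values() if page in f.pages}
        frontier = list(affected)
        while frontier:
            changed = frontier.pop()
            for f in self.features.values():
                if changed in f.depends_on and f.name not in affected:
                    affected.add(f.name)
                    frontier.append(f.name)
        return affected


project = Project()
project.add(Feature("login", pages={"/login"}))
project.add(Feature("checkout", pages={"/cart", "/pay"}, depends_on={"login"}))
project.add(Feature("search", pages={"/search"}))

print(sorted(project.affected_by_page("/login")))  # ['checkout', 'login']
```

Because checkout depends on login in this toy project, a change to the login page flags both features for retesting, while search is left alone.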

The combination is powerful. Fast QA surfaces the unexpected edge cases: the “unknown unknowns.” Slow QA encodes them into durable coverage so they never slip through again. One creates momentum; the other creates resilience. Together, they give companies what Airtable’s org design gave its product: speed without fragility.

The Complementary Loop

The genius of Airtable’s reorg wasn’t just carving the company into two camps. It was the loop between them. Fast thinking teams shipped features that drew attention and adoption. Slow thinking teams built the scaffolding that turned that attention into durable growth. One created sparks, the other carried the fire.

QA needs the same loop.

When autonomous agents explore new features at commit-time, they often trip over edge cases no human would script. Vilhelm von Ehrenheim calls this the AI superpower:

“Humans tend to find expected bugs. AI finds the unexpected ones.”

A fast QA layer brings those discoveries to the surface within hours — before they hit production. But those insights only matter if they feed into something more permanent. That’s where slow QA comes in. The graph architecture captures what the agent found — the flows, dependencies, and breakpoints — and encodes them into reusable coverage. The next time a login flow or checkout step changes, the system already knows where to look.
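The hand-off from fast to slow can be sketched as a store that remembers what agents found per flow and replays those checks when the flow changes again. This is a minimal illustration under stated assumptions: `CoverageStore`, `record_finding`, and `checks_for` are hypothetical names, and the findings are invented examples.

```python
from collections import defaultdict


class CoverageStore:
    """Keeps the failure points discovered for each flow as reusable checks."""

    def __init__(self) -> None:
        self._checks: dict[str, list[str]] = defaultdict(list)

    def record_finding(self, flow: str, failure_point: str) -> None:
        # Fast lane: an agent reports something it tripped over. Duplicates are ignored.
        if failure_point not in self._checks[flow]:
            self._checks[flow].append(failure_point)

    def checks_for(self, changed_flows: list[str]) -> list[str]:
        # Slow lane: when a flow changes, return everything already known to break there.
        return [
            f"{flow}: {point}"
            for flow in changed_flows
            for point in self._checks.get(flow, [])
        ]


store = CoverageStore()
store.record_finding("login", "redirect loops on expired session")
store.record_finding("login", "redirect loops on expired session")  # reported twice
store.record_finding("checkout", "coupon field accepts negative values")

print(store.checks_for(["login"]))  # ['login: redirect loops on expired session']
```

A flow with no recorded findings returns an empty list, which is the signal that it still needs fresh exploration rather than a replay.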

It’s a flywheel: fast QA catches surprises, slow QA makes them predictable. Together, they convert chaos into learning. Without that loop, fast QA alone would be noise. Slow QA alone would be paralysis. Combined, they give organizations the same balance Howie achieved at Airtable: urgency with staying power.

What Leaders Must Do

Engineering leaders are quick to copy the Airtable playbook for product orgs: carve out fast and slow teams, encourage prototypes, celebrate pace. But when it comes to QA, most still run a single-speed model. They either rely on armies of manual testers, or they outsource trust to scripted frameworks that break faster than they’re fixed. That gap is exactly where release trains derail.

The fix isn’t to hire more QA engineers. As Vilhelm von Ehrenheim puts it:

“If code is doubling in speed, more humans can’t plug the gap. QA has to scale like code does — with agents.”

So what should leaders actually do? Three practical moves:

- Designate a fast QA lane. Wire autonomous agents directly into CI/CD so every PR triggers commit-time exploration. Treat it as the experimental “System 1” layer — fast, intuitive, and constantly learning.

- Fund a slow QA backbone. Support a graph-based system that accumulates context: features, flows, dependencies. This is the deliberate “System 2” layer — durable knowledge that turns one-off failures into reusable coverage.

- Set cultural expectations. Make it clear that quality belongs in the same loop as development. No merges on red checks, and no “throw it over the wall” QA cycles. Fast lanes feed discoveries into the slow lane; the slow lane keeps future fast runs sharper.
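The "no merges on red checks" rule from the list above can be sketched as a small gate that a CI step might run after agents finish exploring a PR. Everything here is a hypothetical illustration: `FlowResult` and `merge_gate` are invented names, and how results reach the script depends on the CI system in use.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FlowResult:
    flow: str
    passed: bool


def merge_gate(results: list[FlowResult]) -> int:
    """Return a process exit code: 0 lets the merge proceed, 1 blocks it."""
    if not results:
        # Assumption: a run with no explored flows counts as red, not green.
        print("BLOCKED: no flows were explored")
        return 1
    failed = [r.flow for r in results if not r.passed]
    for flow in failed:
        print(f"FAILED: {flow}")
    return 1 if failed else 0


green = [FlowResult("login", True), FlowResult("checkout", True)]
red = [FlowResult("login", True), FlowResult("checkout", False)]
```

In a pipeline, the step would exit with the returned code, for example `sys.exit(merge_gate(results))`, so a failing flow stops the merge. Treating an empty run as a failure is a deliberate choice in this sketch: a check that explored nothing should not count as a pass.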

Leaders who build these two lanes side by side aren’t just protecting velocity — they’re creating an operating model where QA scales like code.

Forward Look: The AI-Native SDLC

What does this look like a few years out? By 2027, the AI-native software company won’t distinguish between “development” and “testing” as separate stages. Every commit will trigger an autonomous QA cycle — fast agents exploring flows instantly, slow graphs absorbing context and expanding coverage with each change. That’s also the direction QA.tech is building toward: merging QA and development into a single phase where tests run in lockstep with code, not as something bolted on later in the CI/CD pipeline.

The result: a living, self-updating safety net that grows with the product. Developers won’t waste 3.2 days per sprint maintaining brittle test suites. Bugs won’t linger for 14 days before detection. The lag between “we shipped it” and “we know it works” collapses to near-zero.

Vilhelm von Ehrenheim frames it not as a cost center but as a revenue engine:

“When QA keeps pace with code, you’re not just avoiding bugs — you’re protecting deals, transactions, and trust.”

In other words, quality becomes the invisible infrastructure of growth.

The metaphor we opened with still holds. Fast thinking generates momentum; slow thinking ensures durability. Apply that to QA, and you don’t just prevent derailments — you lay down new track as the train speeds ahead.

Companies that embrace this dual-speed QA model will find themselves moving faster and safer. Those that don’t will continue celebrating velocity, only to discover too late that their rails were never built for the speed they demanded.
