Solving Annoying Testing Problems: Playwright vs. AI-Driven Testing

I’ve been there, and you’ve probably been there too: everything seems great with your tests, then suddenly, a UI change breaks everything. It feels like perpetually building on top of sand. One more damn test breaks and your trust issues will start affecting your relationships.

Elements hidden inside iframes, unexpected popups, and interactive maps turn routine testing into a tedious nightmare.

While tools like Playwright have significantly improved automated testing, they often require careful scripting, frequent updates, and still struggle with certain UI complexities.

In this article, we’ll explore how traditional scripted automation with Playwright tackles some notoriously challenging UI elements, then contrast it with an AI-driven testing approach.

By the end of the article, you should have a clear picture of when to stick with traditional automation and when to reach for AI instead.

Handling Complex UI Elements with Playwright

Scenario 1: Dynamic Sign-Up Forms

Testing sign-up forms with Playwright reveals several timing and validation challenges. The test environment includes a form with real-time validation, unpredictable email availability checks, and random submission failures.

I deployed the test app to GitHub Pages and the code is openly available here.

// Fill form with real-time validation
await page.fill('#fullName', 'John Doe');
await page.fill('#email', 'john@example.com');
await page.fill('#password', 'SecurePass123!');
await page.fill('#confirmPassword', 'SecurePass123!');

// Wait for async email validation - unpredictable timing (500ms - 2.5s)
await page.waitForTimeout(3000); // Hope it's enough time

// Handle dynamic password strength indicator
await expect(page.locator('.strength-bar.strong')).toBeVisible();

// Submit with variable loading states
// (toggleCheckbox is a project helper, not a Playwright API; its definition is not shown here)
await toggleCheckbox(page, 'agreeTerms', true);
await page.click('.submit-btn');
await expect(page.locator('.btn-text')).toHaveText('Creating Account...');

// Form submission has random failures (30% chance) with 1.5-4.5s delay
try {
  await expect(page.locator('.success-state')).toBeVisible({ timeout: 8000 });
} catch (error) {
  // Handle random failure and retry logic
  if (await page.locator('.error-state').isVisible()) {
    await page.click('.retry-btn');
    await page.click('.submit-btn');
  }
}

The form includes several unpredictable elements:

- Email availability check: 30% chance any email is “already registered” with random 500ms-2.5s delay

- Password strength indicator: Updates in real-time as user types

- Form submission: 30% failure rate with random 1.5-4.5s processing time

- Validation timing: Each field validates on blur with different timing

This script assumes validation timing is consistent, password strength indicators update predictably, and loading durations don’t vary — all challenges that make tests brittle in real applications.
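One way to stop guessing at a fixed delay is to poll for the condition and move on as soon as it holds. The sketch below is a minimal, framework-free illustration in TypeScript. `pollUntil`, `Probe`, and `PollOptions` are hypothetical names introduced here; they are not part of Playwright or of the test app.

```typescript
// Hypothetical helper: poll an async condition until it holds or a deadline passes.
type Probe = () => Promise<boolean>;

interface PollOptions {
  timeoutMs: number;  // give up after this long
  intervalMs: number; // pause between checks
}

async function pollUntil(probe: Probe, opts: PollOptions): Promise<boolean> {
  const deadline = Date.now() + opts.timeoutMs;
  while (true) {
    if (await probe()) return true;           // condition met: stop immediately
    if (Date.now() >= deadline) return false; // out of time: report failure
    // Sleep briefly before the next check.
    await new Promise<void>((resolve) => setTimeout(resolve, opts.intervalMs));
  }
}
```

Playwright's web-first assertions, such as the `toBeVisible` calls in the script above, already retry like this up to their timeout. In a real test the probe would wrap whatever element signals that the email check has finished; that selector is not shown in this article, so it is left out here.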

Scenario 2: Embedded Google Maps

Interacting with embedded maps often involves navigating layers of DOM elements:

const mapFrame = page.frameLocator('iframe[src*="google.com/maps"]');
await mapFrame.locator('button[aria-label="Zoom in"]').click();

The test environment includes a real Google Maps iframe integration that loads the Space Needle in Seattle. Maintaining such selectors can become tedious as Google Maps frequently updates its DOM structure.

Scenario 3: Unpredictable Popups

Handling unpredictable popups can be tricky, especially if they don’t consistently appear:

// Popup appears randomly within 20 seconds during form interaction
const popup = page.locator('.popup-modal');
// isVisible() checks once and returns immediately; it does not wait for the popup
if (await popup.isVisible()) {
  await popup.locator('text=Maybe Later').click();
}

The implementation includes a popup that appears randomly within 20 seconds during form interaction, with two different button states (“Maybe Later” or “Claim Offer”). Even with defensive coding, flaky tests will creep in, causing frustrating and unnecessary test maintenance.
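A slightly more defensive pattern than the one-shot `isVisible()` check is to clear the popup before each attempt at a step and retry if the step fails. The sketch below shows the idea without depending on Playwright. The `Popup` interface and `withPopupGuard` are hypothetical names made up for this example, and the retry count is an arbitrary assumption.

```typescript
// Minimal stand-in for a popup; in a Playwright test this might wrap a Locator.
interface Popup {
  isVisible(): Promise<boolean>;
  dismiss(): Promise<void>;
}

// Hypothetical helper: run a step, clearing the popup before each attempt.
async function withPopupGuard<T>(
  popup: Popup,
  step: () => Promise<T>,
  maxAttempts = 3
): Promise<T> {
  let lastError: unknown = new Error("maxAttempts must be at least 1");
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    if (await popup.isVisible()) {
      await popup.dismiss(); // e.g. click "Maybe Later"
    }
    try {
      return await step();
    } catch (err) {
      lastError = err; // the popup may have appeared mid-step; try again
    }
  }
  throw lastError; // every attempt failed: surface the last error
}
```

Recent Playwright versions also provide `page.addLocatorHandler` for overlays like this one, so it is worth checking the docs for your version before rolling your own. Either way, this is still defensive code that someone has to maintain.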

Simplifying Testing with AI-Driven QA

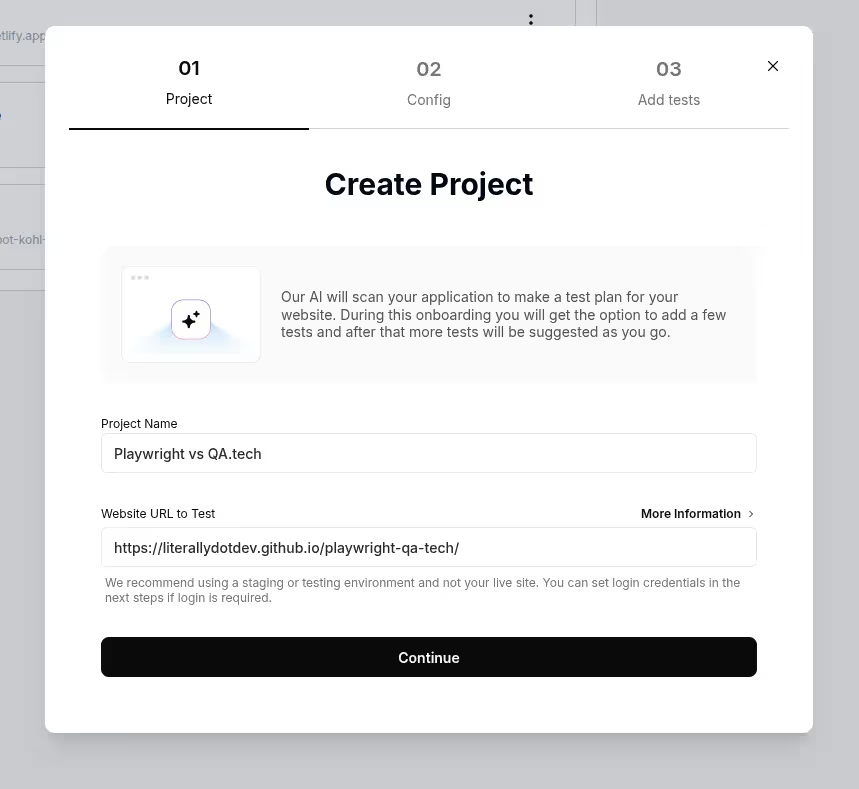

QA.tech takes a fundamentally different approach by learning your application and automatically adapting tests.

You can get started by simply creating a project and linking your application to it.

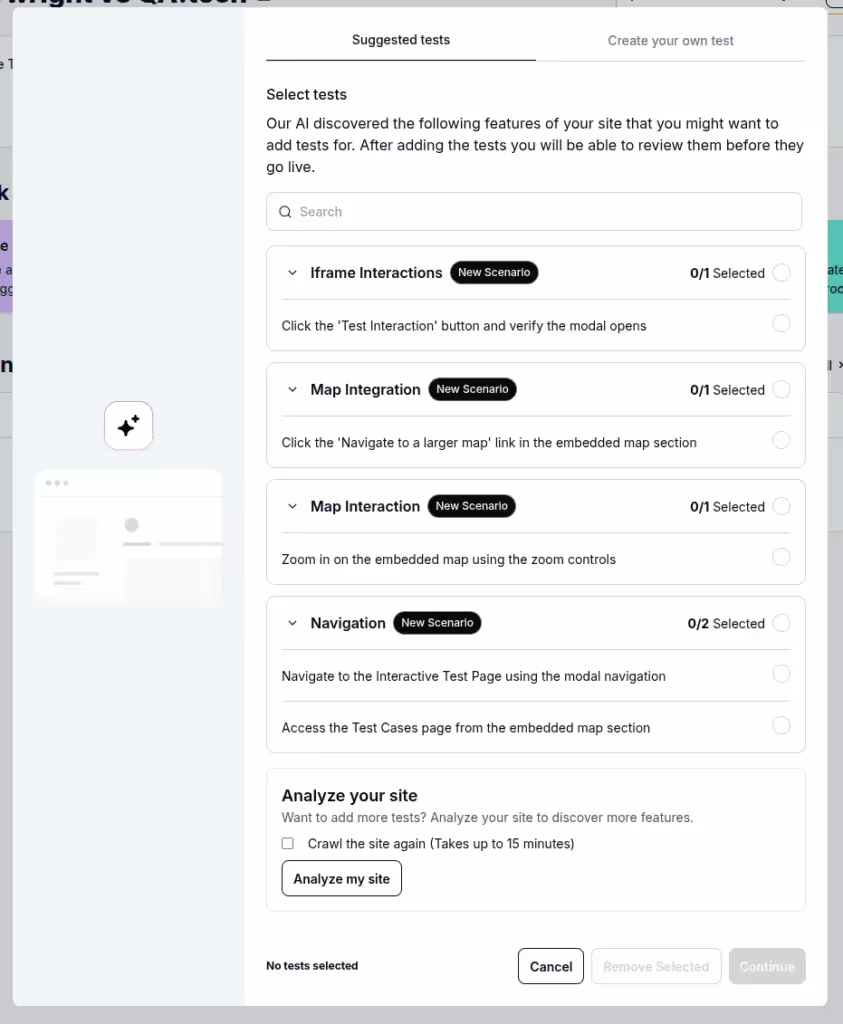

To create tests, navigate to the Test Cases menu and press Add Test Case. Here you can also choose to Analyze your site.

Once the AI finishes analyzing your site, it proposes a list of test cases.

This will get you up and running quickly. However, the real power of automated QA is that you can define your own tests in plain text.

Scenario 1: Dynamic Sign-Up Forms

Instead of writing brittle scripts with hardcoded timeouts, QA.tech identifies the form automatically and reacts to its states — even as your app evolves.

It handles:

- Email validation timing: Adapts to the 500ms-2.5s random delays

- Password strength indicators: Recognizes real-time UI feedback changes

- Random submission failures: Handles 30% failure rate gracefully with automatic retry logic

- Form state changes: Adapts to success/error states without hardcoded expectations

![Scenario 1 demo: dynamic sign-up form](https://cdn.prod.website-files.com/68b6ea19ac0a61cd114aa104/68d53aa34c9d0860eacd6e31_ezgif-5155fa36857ce0.gif)

Scenario 2: Embedded Google Maps

QA.tech adapts to frequent DOM changes in embedded third-party apps like Google Maps, significantly reducing the time you spend maintaining fragile selectors.

The test shows the AI agent clicking the “Zoom in” button within the embedded iframe to zoom in on the Space Needle.

![Scenario 2 demo: embedded Google Maps](https://cdn.prod.website-files.com/68b6ea19ac0a61cd114aa104/68d539dddf45ca66ecbdc0ab_ezgif-56f768bdea0eb6.gif)

Scenario 3: Unexpected Popups

AI-driven tests anticipate and handle popups seamlessly, reducing flakiness and improving reliability without defensive coding.

As the embedded Google Maps demo above shows, the AI agent dismisses the “Special Offer” popup and continues with its objective.

The Honest Conversation About AI Testing

I know the gut reaction: “AI testing? Really? Another AI tool that promises to solve all my problems?”

I’ve been burned by overhyped tech solutions before too. So let’s have an honest conversation about what AI testing actually delivers versus what it promises.

The Reality Check

When I first heard about AI-driven testing, my immediate reaction was skepticism. How could AI possibly understand our custom components, our weird edge cases, our business logic that took months to build?

Here’s what I learned: it can’t. Not completely.

AI testing isn’t going to magically understand your complex financial calculations or your custom drag-and-drop component with seventeen different states. But here’s what it is incredibly good at: handling the tedious, repetitive stuff that eats up 80% of your testing time.

Think about it. How much of your day is spent updating selectors because the frontend team changed a class name? Or adding waits because the API got slightly slower? Or handling popup variations that Marketing keeps A/B testing? That is where a bit of AI goes a long way.

What Actually Happens When You Try It

The first time you watch an AI agent navigate your application, it’s genuinely weird. It doesn’t follow your test scripts — it just… figures things out. Like watching someone else use your app for the first time, except they adapt in real-time instead of getting confused.

Mistakes Happen

Yes, AI can make mistakes. So can your Playwright scripts. The difference is in how they fail. Traditional tests break when the UI changes; AI tests adapt to UI changes but might miss subtle business logic errors.

The key insight: Use both strategically. Let AI handle the navigation, form filling, and UI interactions. Keep your critical business logic tests in traditional scripts where you need precise control.

The Economics Make Sense

Say you’re saving 200 to 300 hours per quarter after implementing AI testing. Even if you’re paying a premium for the AI tool, how much is 300 hours of developer time worth? The answer is: a lot more than the tool costs.
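If you want to run that math for your own team, a back-of-the-envelope version might look like the sketch below. Every number here is a placeholder I made up to show the arithmetic: the hourly cost and the tool price are assumptions, not figures from QA.tech or any customer.

```typescript
// Net savings per quarter: value of the hours saved minus what the tool costs.
function quarterlyNetSavings(
  hoursSaved: number,
  hourlyCost: number,
  toolCostPerQuarter: number
): number {
  return hoursSaved * hourlyCost - toolCostPerQuarter;
}

// Hours you need to save per quarter before the tool pays for itself.
function breakEvenHours(hourlyCost: number, toolCostPerQuarter: number): number {
  return toolCostPerQuarter / hourlyCost;
}

// Placeholder inputs: 300 hours saved, 50 per hour, 5,000 per quarter for the tool.
const net = quarterlyNetSavings(300, 50, 5000); // 300 * 50 - 5000 = 10000
const breakEven = breakEvenHours(50, 5000);     // 5000 / 50 = 100
console.log(`net savings: ${net}, break-even hours: ${breakEven}`);
```

Swap in your own loaded hourly cost and the quote you actually get. If your break-even point is well below the hours you currently lose to test maintenance, the case makes itself.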

Starting Small Is Smart

You don’t need to revolutionize your entire testing strategy overnight. Pick one workflow that breaks your tests constantly: maybe your checkout process, your user onboarding, or that admin dashboard that gets updated every sprint.

Let AI handle that one workflow for a month. See how it performs. Then decide if you want to expand or stick with traditional approaches for everything else.

Real-World Impact

Companies using AI-driven QA report significant improvements in testing efficiency:

- Pricer’s transformation: Saved 390 hours per quarter while reducing their QA team from 8 to 2 engineers

- Strise’s KYC compliance testing: Saved 256 hours while executing 3,622 test cases across complex KYC workflows

This isn’t marketing fluff; these are real teams solving real problems with AI-driven testing.

The Verdict: Choose the Right Tool for the Job

Playwright and traditional scripted automation remain valuable. However, AI-driven testing solutions like QA.tech offer compelling benefits for testing dynamic and complex UI elements. Less scripting, lower maintenance, and more reliable tests are possible by adopting AI-powered QA.

Ready to simplify your testing?

Try QA.tech for free.
