When AI Speeds Up Development, QA Can’t Stay in the Slow Lane

AI coding tools have put development on fast-forward, but most teams have left their quality assurance on pause.

Cursor, GitHub Copilot, Claude Code, and a wave of AI-assisted development platforms have supercharged engineering velocity. Entire features ship in hours instead of weeks. But faster development doesn’t mean better software.

In fact, for many teams, it means the opposite: a higher volume of code pushed to production without a corresponding increase in quality assurance. And that gap isn’t just a technical inconvenience. It’s a business risk, a retention killer, and a silent revenue leak.

The conventional wisdom says AI can write code but can’t test it, like a chef who bakes the cake but can’t taste it. We’re here to prove that wrong. Over the next few sections, we’ll explore:

- How AI-powered QA can match AI development pace

- Why relying on traditional QA is a bottleneck in 2025

- And what future-ready teams are doing to ensure speed doesn’t come at the expense of trust, stability, and growth.

The New Velocity Paradigm in Software Development

In 2025, 62% of engineering leaders say AI coding tools have increased the volume of post-merge defects, and most still test the old way.

That’s the paradox of AI-assisted development. On one hand, AI coding tools are now nearly universal, used by 90% of engineering teams to accelerate feature delivery, write boilerplate faster, and unblock developers. On the other, they’re introducing quality issues that slip past existing safeguards, creating a visible spike in defects after code is merged.

Every commit, no matter how small, can introduce regression risks. With AI tools in the mix, those commits happen more often than ever. The result: faster feature creation, but more downstream cleanup.

AI-generated code often requires extra human oversight and correction for logic errors, misaligned styles, and subtle integration issues. But while development workflows have evolved to incorporate these tools, QA hasn’t kept pace.

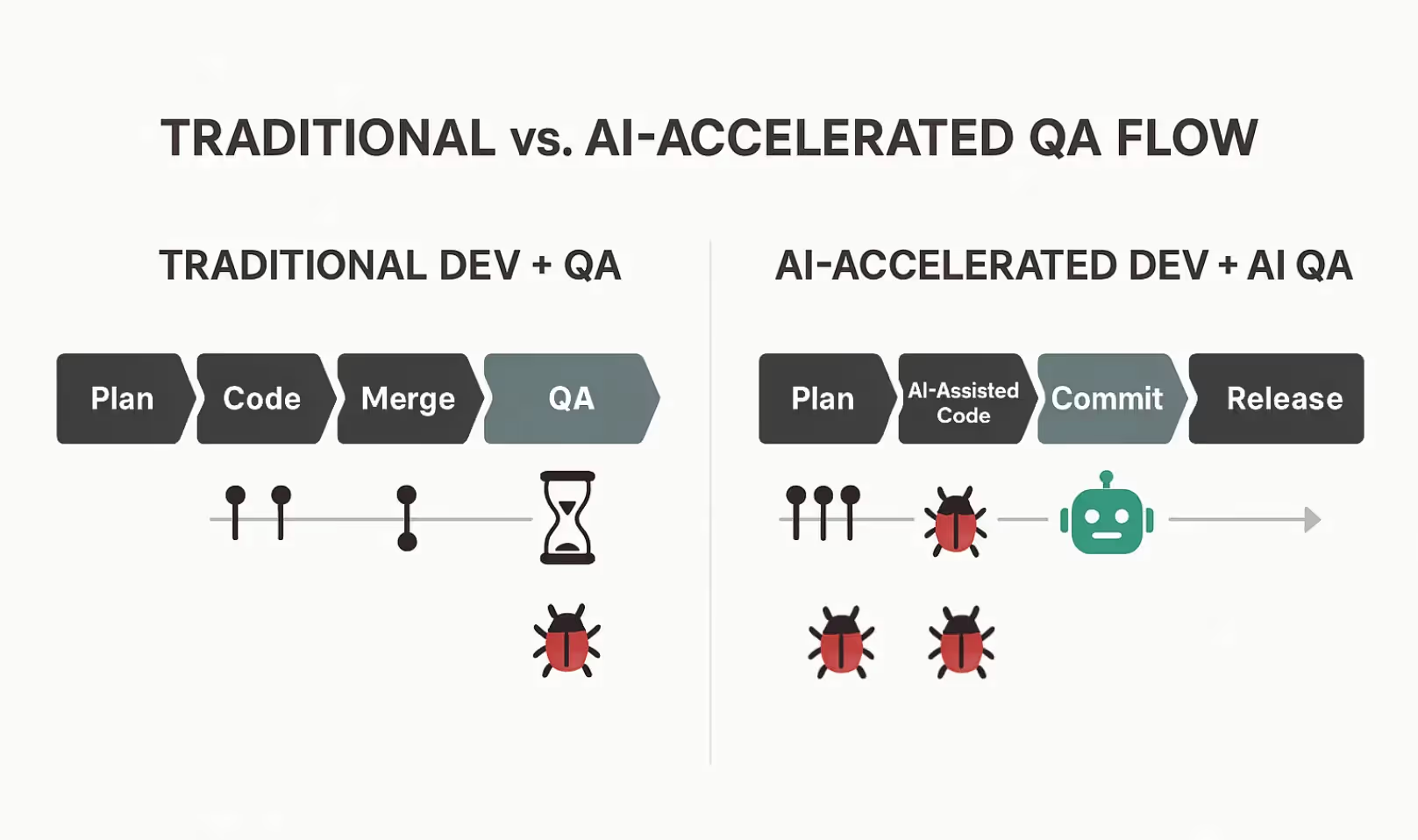

Many teams still rely on traditional testing approaches: running large regression suites after merges, manual exploratory testing, and scheduled QA sprints. These processes were designed for slower, more predictable release cadences, not for the continuous influx of changes AI enables.

This mismatch creates a quality gap. Increased speed and volume in code delivery, combined with static QA processes, means more defects escape earlier detection and reach later stages of testing and production. The cost is measured not only in rework, but also in customer trust, retention, and brand credibility.

“The faster your coders go, the faster your testing bottlenecks become revenue risks,” says Vilhelm von Ehrenheim, Co-Founder & Chief AI Officer at QA.tech.

So where does that leave teams in 2025? If AI accelerates development, QA must accelerate too. But how? Can AI itself play a role in closing the quality gap? Or is that asking the fox to guard the henhouse? Are there trustworthy solutions that can truly keep up with AI-speed development? And what’s the real cost of ignoring QA until it’s too late? These are the questions we’ll explore next, starting with the new reality of QA in the Age of AI.

The Bottleneck Has Moved: QA in the Age of AI

In manufacturing theory, the Theory of Constraints teaches that a system’s output is limited by its slowest stage (the bottleneck). Improve that bottleneck, and the constraint will shift elsewhere. In software development, the bottleneck used to be coding itself. That’s no longer a safe assumption.

Recent research has shown AI’s effect on development productivity is far from uniform. In some contexts, AI coding tools accelerate delivery by 20% or more. In others, they slow seasoned developers down due to the extra time needed to review and debug AI-generated output. But regardless of whether AI speeds up or stalls the writing of code, one effect is consistent: it changes the volume, cadence, and complexity of code reaching the rest of the pipeline.

And that’s where the constraint has moved. QA, testing, and release validation (stages once comfortably buffered from the chaos of development) are now absorbing more changes at a faster pace, without equivalent process or tooling upgrades.
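The shifting constraint can be made concrete with a toy model. The sketch below is only an illustration: the stage names, the weekly capacities, and the assumption that AI triples coding capacity are all invented for the example, not measured figures.

```python
def bottleneck(stages: dict[str, float]) -> tuple[str, float]:
    """Return the slowest stage and its capacity, which caps system throughput."""
    name = min(stages, key=stages.get)
    return name, stages[name]


# Hypothetical capacities, in changes each stage can process per week.
before_ai = {"coding": 20, "code_review": 35, "qa": 30, "release": 50}

# Assumption: AI tooling triples coding capacity; every other stage is unchanged.
after_ai = {**before_ai, "coding": before_ai["coding"] * 3}

print(bottleneck(before_ai))  # ('coding', 20)
print(bottleneck(after_ai))   # ('qa', 30)
```

In this toy model, tripling coding capacity lifts overall throughput only from 20 to 30 changes per week, because QA becomes the limiting stage.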

In many teams, traditional QA cycles and regression testing suites are still operating on schedules built for a pre-AI cadence. The result? Defects slip through earlier gates, bug discovery shifts later into the cycle, and release confidence erodes.

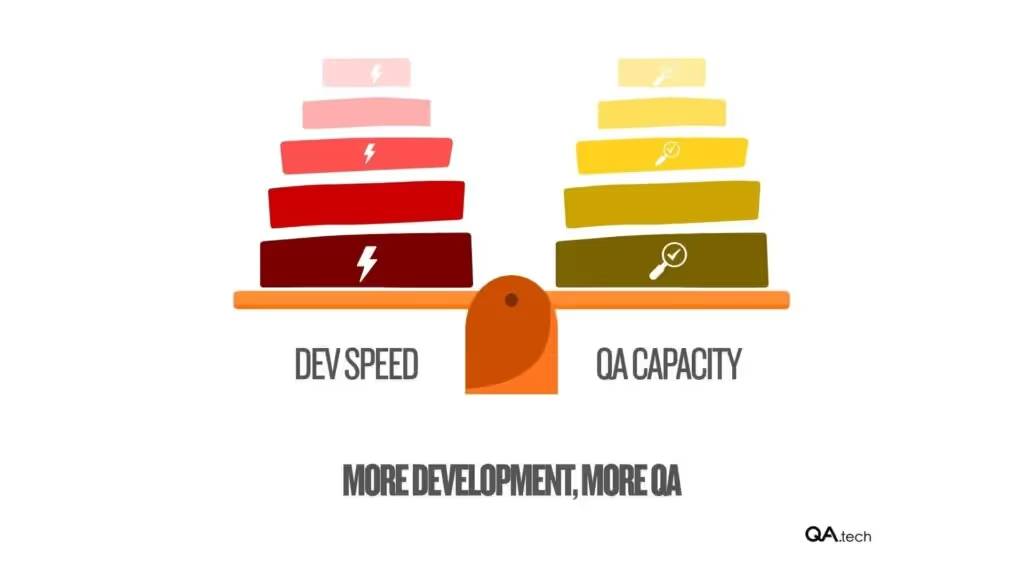

“If you 10x your dev output, you’d better 10x your QA. AI gives you the superpower to do both.” — Vilhelm von Ehrenheim

Catching bugs is only part of the challenge; the real test is keeping quality aligned with the pace of ambition. Without evolving QA to match AI-driven development velocity, organizations risk trading short-term speed for long-term instability, technical debt, and customer attrition.

The good news? The same AI revolution that shifted the bottleneck can also help remove it if QA becomes as autonomous, adaptive, and continuous as the development it supports. That’s where we turn next.

Why Speed Without Quality Hurts the Business

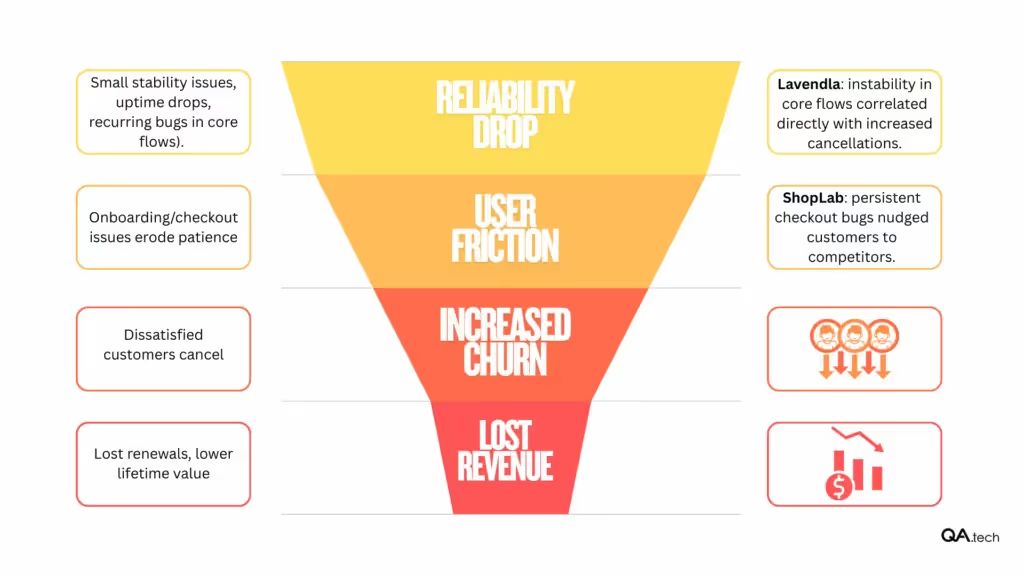

In B2B SaaS, reliability is a growth lever. According to the 2025 Recurly Churn Report, the average annual churn rate for B2B SaaS companies is 3.5%. Voluntary churn, which is often tied to product reliability, onboarding, and support quality, accounts for 2.6%. While those numbers may look small, the report stresses that even modest increases in churn can have an outsized impact on revenue growth. This applies especially in subscription businesses, where keeping existing customers is the primary driver of net revenue retention (NRR).
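A rough calculation shows why small churn differences compound. This is a simplified sketch, not a model from the Recurly report: it assumes a fixed starting cohort, ignores new sales and expansion revenue, and compares the 3.5% figure cited above with a hypothetical 5% rate. The starting revenue and the five-period horizon are also made up.

```python
def retained_revenue(start: float, churn_rate: float, periods: int) -> float:
    """Revenue left from an initial cohort after compounding churn.

    Ignores new sales and expansion revenue (a deliberate simplification).
    """
    return start * (1 - churn_rate) ** periods


start = 1_000_000.0  # hypothetical starting recurring revenue
baseline = retained_revenue(start, 0.035, 5)  # churn rate cited in the article
degraded = retained_revenue(start, 0.05, 5)   # hypothetical: 1.5 points worse

print(f"baseline: {baseline:,.0f}")
print(f"degraded: {degraded:,.0f}")
print(f"gap:      {baseline - degraded:,.0f}")
```

The gap between the two lines widens with every additional period, which is the compounding effect the report warns about.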

We’ve seen this play out in real teams. Lavendla, a customer-facing SaaS platform, discovered that instability in core user flows didn’t just cause short-term frustration. It correlated directly with subscription cancellations in the following quarter.

ShopLab faced a similar reality: minor but persistent checkout bugs were enough to nudge paying customers toward competitors with smoother experiences. In both cases, the lost revenue wasn’t limited to immediate churn. It meant losing upsell opportunities, reducing lifetime value, and handing market share to rivals.

This risk is even greater for companies trying to break into the market. When a prospective customer chooses a lesser-known solution over an established competitor, their tolerance for friction is low.

A buggy onboarding experience or a broken core workflow doesn’t just frustrate them. It undermines their decision to choose the challenger in the first place. That first bad impression sends them straight back to the market leaders, where perceived stability outweighs innovation or price.

The damage goes beyond retention. Product instability erodes brand trust, especially in competitive categories with low switching costs. Dissatisfied customers don’t leave quietly. They post reviews, share experiences, and make it harder to win new business. Support teams absorb the flood of bug-related tickets, while engineering diverts resources from innovation to firefighting.

Quality is not an abstract virtue; it’s a measurable factor in growth or decline. And when speed is prioritized without equal investment in stability, the result is predictable: customer attrition, competitive loss, brand erosion, and rising operational costs.

The question is: if these outcomes are so costly, why do so many teams let preventable issues escape into production? The answer is that these business consequences aren’t random. They’re the direct result of quality processes still operating on timelines and assumptions from a slower era of software delivery.

Why Traditional QA Can’t Keep Up

Traditional QA models, built around manual testing cycles, brittle automated scripts, and post-merge validation, were designed for a slower, more predictable development cadence. When AI coding tools increase the volume and frequency of commits, a QA process that still depends on long regression runs or manual exploratory passes quickly becomes the bottleneck.

By the time issues surface, they’ve already made their way deep into the release pipeline, or worse, into production. In some cases, releases are blocked altogether while teams scramble to resolve last-minute defects, halting delivery and incurring costly delays.

For established players with deep customer loyalty, a defect might be a bruise. For emerging companies trying to win market share, it can be fatal. These challengers are asking customers to take a risk on a new name. If that first experience is marred by bugs, broken flows, or degraded performance, the customer’s conclusion is swift: go back to the safer, more established option.

Traditional QA’s slower pace all but guarantees those risks are higher, especially in the early months when impressions are being formed. The structural weaknesses are easy to spot:

- Manual bottlenecks: Reliance on human testers for repetitive checks slows cycles and adds inconsistency.

- Script fragility: Automated scripts often break with UI or flow changes, requiring constant maintenance that steals time from actual testing.

- Delayed validation: Quality gates placed late in the pipeline allow defects to propagate before they’re caught.

These weaknesses aren’t going away on their own. The more AI accelerates development, the more glaring they become. That is why forward-looking teams are starting to ask: if AI can accelerate coding, can it also accelerate QA without compromising trust in the results?

The Case for AI-Driven QA

If AI has changed the speed and volume of development, the only way QA can keep up is to evolve in kind. This doesn’t mean replacing human testers entirely. It means giving them a system that can match the pace, coverage, and adaptability of AI-accelerated coding.

AI-driven QA agents work differently from traditional automation. Instead of relying solely on pre-scripted test cases that need constant maintenance, they can:

- Learn the application dynamically, mapping user flows and building an internal “mental model” of the product (an evolving map of how features, screens, and flows connect) without being told exactly where to click.

- Continuously explore and validate, running checks on revenue-critical paths, integrations, and UI elements at commit-time, not days later.

- Self-heal tests, adapting to UI or flow changes automatically (without requiring a human to rewrite the test script), reducing the maintenance burden that slows traditional automation.
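To make the self-healing idea concrete, here is a deliberately tiny sketch. It is not how QA.tech or any particular tool works: the `Element` type, the `find_element` helper, and the fallback rule (match on role and visible text when the scripted id is gone) are all hypothetical, and the "page" is just a Python list rather than a real browser DOM.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Element:
    element_id: str
    role: str
    text: str


def find_element(
    page: list[Element], element_id: str, role: str, text: str
) -> tuple[Element | None, bool]:
    """Return (element, healed).

    Try the scripted id first; if it is gone, fall back to role and visible text.
    """
    for el in page:
        if el.element_id == element_id:
            return el, False
    for el in page:
        if el.role == role and el.text.strip().lower() == text.strip().lower():
            return el, True  # found via fallback, so the scripted id is stale
    return None, False


# The checkout button's id changed in a redesign, but its role and label did not.
old_page = [Element("btn-checkout", "button", "Checkout")]
new_page = [Element("cta-primary", "button", "Checkout")]

print(find_element(old_page, "btn-checkout", "button", "Checkout"))
print(find_element(new_page, "btn-checkout", "button", "Checkout"))
```

A traditional script would fail on `new_page` because the id no longer exists. The fallback finds the button anyway and reports `healed=True`, a signal that the stored locator should be updated.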

This shift changes QA from a reactive gatekeeper to a proactive partner in the development cycle. Issues can be detected and triaged within minutes of a code change, when they’re cheaper and faster to fix, rather than waiting days or weeks for them to propagate into production.

The benefits go beyond speed:

- Broader coverage: AI agents can explore edge cases and user journeys that scripted tests often miss.

- Higher release confidence: Continuous validation means defects are less likely to surprise you after a deploy.

- Reduced bottlenecks: QA scales with development velocity instead of constraining it.

This is a challenge for every software company. For growth-stage challengers, winning customers from entrenched competitors depends on delivering a flawless first experience. A single buggy onboarding or broken feature can drive prospects straight back to the safe bet.

For established brands, the stakes are different but just as high. Years of trust and market dominance can be eroded by a few high-profile failures. This can open the door for rivals to gain a foothold. AI-driven QA reduces these risks across the board by making quality assurance as fast, flexible, and relentless as modern development.

“The future isn’t fewer bugs. It’s finding them before customers do.” — Vilhelm von Ehrenheim

Of course, adopting AI-driven QA raises its own questions: Can we trust it? How does it fit into existing pipelines? How much human oversight do we still need? These are exactly the challenges forward-thinking teams are working through, and where hybrid models combining AI with human judgment are proving the most effective.

AI as Force Multiplier, Not Replacement

When teams hear “AI-driven QA,” skepticism often follows. Can we trust the same class of technology that generates the code to also verify it? Won’t we just be automating mistakes faster?

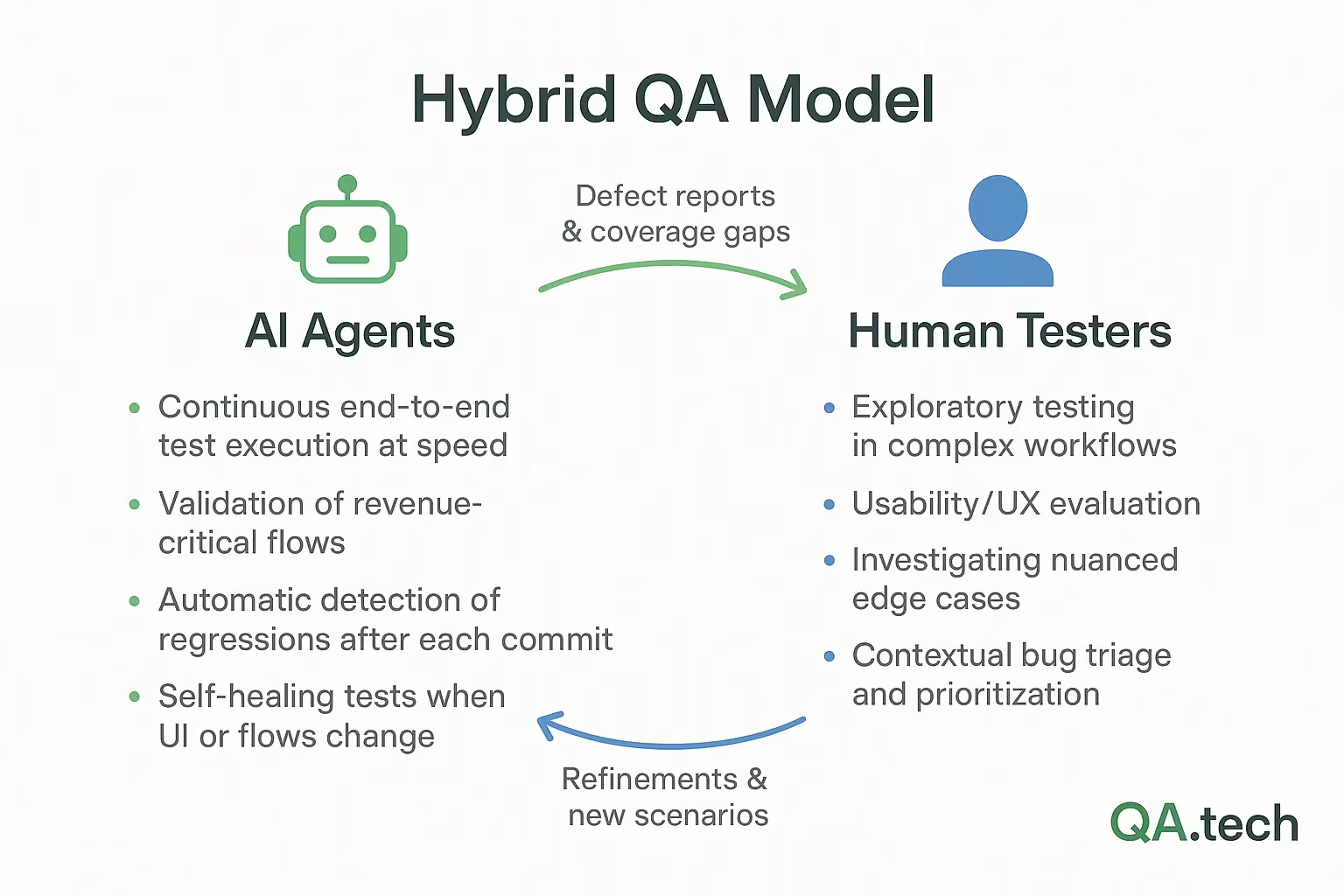

The reality is that AI in QA is not about replacing humans. It’s about augmenting them. Just as AI-assisted coding tools free developers from repetitive boilerplate so they can focus on architecture and problem-solving, AI QA agents handle the repetitive, high-volume test execution that would bog down human testers. This frees people to do what machines can’t: apply context, evaluate usability, and investigate the subtle edge cases that require judgment, creativity, and domain expertise.

In practice, the most effective teams are adopting hybrid QA models where:

- AI agents continuously scan, test, and validate flows at speed, catching obvious and recurring defects.

- Human testers focus on exploratory testing, complex workflows, and evaluating user experience and visual quality.

- Feedback loops ensure that findings from human testing feed back into the AI agents’ models, improving their coverage and relevance over time.

This hybrid approach also builds trust. Humans remain in the loop for final validation, ensuring AI is a powerful assistant, not an unchecked gatekeeper. Over time, as the AI proves its reliability, teams can scale its role in the pipeline without surrendering oversight.

“AI won’t replace engineers. But it will replace engineers who don’t use AI.” — Vilhelm von Ehrenheim

The payoff is significant. A development organization with effective AI-assisted QA can release faster without inviting chaos, scale testing coverage without proportional increases in headcount, and reduce the “bug surprise” moments that undermine both morale and customer trust.

By reframing AI QA as a force multiplier rather than a replacement, we remove the fear of lost control and focus on the real goal: creating a QA process that’s fast, adaptive, and as committed to quality as the team itself. But for this hybrid approach to deliver consistently, we need to embed it in a QA infrastructure that can match the speed, scale, and rhythm of AI-accelerated development.

Scaling QA Infrastructure with Dev Velocity

Matching QA speed to AI-accelerated development depends on rethinking the entire quality infrastructure so it scales in lockstep with engineering output. That means QA needs to be continuous, integrated, and capable of handling the same rapid-fire cadence that AI-assisted developers create.

In 2025, 90% of engineering teams report using AI coding tools to speed delivery, but most have not made equivalent upgrades to their QA processes. According to recent industry surveys, around 16% have adopted AI-assisted testing or continuous validation, creating a structural mismatch that fuels the increase in post-merge defects reported by 62% of engineering leaders.

If teams don’t close this gap, downstream quality failures will offset every gain in development speed.

Here’s how to keep QA moving at AI speed without burning out your team or budget:

- Integrate into CI/CD pipelines: AI-driven QA agents should trigger automatically at commit-time and during pull requests, validating critical flows before code even hits staging. This “shift left” approach can reduce the cost of fixing defects by up to 90%, according to multiple independent software quality economics studies (a figure based on industry-wide research, not QA.tech’s own data).

- Prioritize revenue-critical flows: Not all tests have equal business impact. Start by ensuring the AI agent continuously validates the workflows tied to onboarding, transactions, and core feature adoption. This protects customer retention, a critical factor in B2B SaaS, where the average churn rate is just 3.5% and even small increases can have significant revenue impacts.

- Leverage cloud-based parallelization: Running tests in parallel across scalable infrastructure ensures coverage doesn’t slow down releases. Case studies show that parallel execution can cut validation cycles from hours to minutes, making it feasible to run comprehensive end-to-end checks multiple times per day.

- Automate triage and reporting: Defect detection is only half the battle. AI agents can also classify and prioritize bugs, integrate with ticketing systems, and surface only the most urgent issues to developers, reducing noise and increasing responsiveness.
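The four practices above can be combined in a small sketch: run flow checks in parallel, then report failures ordered by business impact so a CI job can gate on them. Everything here is illustrative. The `FlowCheck` type, the `revenue_weight` scores, and the stand-in lambdas are assumptions; a real pipeline would drive a browser or call an API where the lambdas are.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass
from typing import Callable


@dataclass
class FlowCheck:
    name: str
    revenue_weight: int      # hypothetical business-impact score, higher = more critical
    run: Callable[[], bool]  # returns True when the flow passes


def run_checks(checks: list[FlowCheck], max_workers: int = 4) -> list[tuple[str, int]]:
    """Run all checks in parallel; return failed flows, most revenue-critical first."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map yields results in the same order as the input checks.
        results = list(pool.map(lambda check: check.run(), checks))
    failures = [(c.name, c.revenue_weight) for c, ok in zip(checks, results) if not ok]
    return sorted(failures, key=lambda failure: failure[1], reverse=True)


# Stand-in checks with hard-coded outcomes, for illustration only.
checks = [
    FlowCheck("onboarding", 8, lambda: True),
    FlowCheck("checkout", 10, lambda: False),
    FlowCheck("profile_settings", 2, lambda: False),
]

failed = run_checks(checks)
print(failed)  # [('checkout', 10), ('profile_settings', 2)]
```

In a commit or pull-request job, a non-empty `failed` list could fail the build, with the ordered list forwarded to a ticketing system so the most revenue-critical breakage is triaged first.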

For growth-stage companies, this kind of scalable QA infrastructure allows them to ship fast without eroding trust, giving them a competitive edge against entrenched incumbents. For established brands, it means protecting hard-won reputations while still benefiting from AI-driven development speed.

“Manual QA doesn’t scale with AI-boosted coding. That’s where everything breaks.” — Vilhelm von Ehrenheim

By embedding quality assurance directly into the flow of work and scaling it alongside engineering output, teams turn QA from a gate that slows releases into a driver of delivery speed and confidence. As we’ll see next, building this capability is as much about shifting mindsets and team culture as it is about adopting new tools.

The Human Side — CTO and Developer Concerns

Adopting AI-driven QA is both a technical and a cultural shift. For CTOs and engineering leaders, the decision involves more than proving out the technology. It means earning buy-in from teams who may be wary of changing established workflows or skeptical about trusting AI with a critical part of the release process.

For many developers, the concern is deeply personal: Will this take away part of my role? Can AI really understand my product well enough to test it effectively? Recent surveys show that trust in AI-generated output is still remarkably low. The 2025 Stack Overflow Developer Survey, which polled over 49,000 developers, found that while 84% use or plan to use AI tools, only about 33% trust the accuracy of AI output, and just 3% express “high trust.” Nearly half (46%) actively distrust AI-generated results, with most developers, particularly experienced ones, verifying AI output through human review.

That skepticism deepens when the task is complex or business-critical, as with AI-driven testing. For QA adoption, this means the technology must first prove its reliability before it earns a permanent place in the release process.

CTOs face their own pressure points. For growth-stage companies, the fear is that bugs slipping through will undermine hard-won credibility before the brand is established. Larger, established organizations face a different risk: one high-profile outage or broken feature can spark public scrutiny and erode years of trust.

Regardless of size, leaders must balance the promise of speed and efficiency with the responsibility to protect the business from instability.

Building confidence in AI QA often starts small. Successful adopters run pilots on high-value, high-risk user flows, keeping humans in the loop for validation. Over time, as the AI proves its ability to catch issues early and integrate seamlessly with developer workflows, resistance fades and adoption accelerates.

The key is positioning AI not as a replacement, but as a safeguard — one that helps teams ship faster and sleep better at night.

Practical Adoption Playbook

Transitioning from traditional QA to AI-driven QA doesn’t happen overnight. It requires a mindset shift and a process designed to scale with development velocity. But with the right partner, it’s far from daunting. At [QA.tech](https://qa.tech/), teams aren’t left to figure it out alone. Clients can run a demo, see meaningful results almost immediately, and follow a proven adoption path backed by hands-on guidance.

Companies like Pricer and Telgea show how quickly the change can happen.

- Pricer cut 390 hours of QA time per quarter and reduced production bugs.

- Telgea scaled global testing coverage, shortened QA cycles, and freed developers to focus on innovation instead of manual QA maintenance.

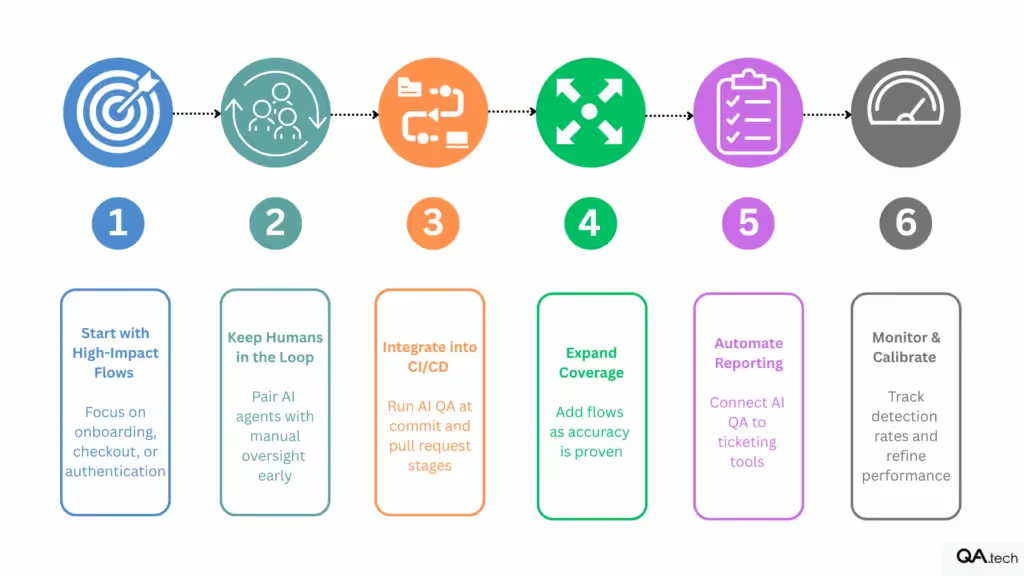

A proven roadmap for adopting AI QA effectively:

- Start with high-impact, high-risk flows: Focus first on the workflows where failure is most expensive: onboarding, checkout, authentication. Early wins in these areas deliver immediate business value.

- Keep humans in the loop: Pair AI test agents with manual oversight during the pilot phase. This builds trust, surfaces measurable accuracy metrics, and creates a shared confidence in results.

- Integrate into existing CI/CD: Embed AI-driven testing at commit and pull request stages, not just after code merges. According to DevOps Research and Assessment (DORA) studies and related industry research, this “shift left” approach consistently reduces the number of defects that escape into production by around 50% or more compared to relying solely on post-merge testing. Early, continuous automated testing catches issues when they’re cheaper and faster to fix, improves deployment success rates, and prevents costly bugs from reaching customers. QA.tech’s AI agents make this possible at scale by running autonomous end-to-end tests that complement developer-written unit and integration tests, ensuring critical user flows are validated automatically with every code change.

- Expand coverage gradually: Scale beyond the pilot areas once reliability is proven. Use test analytics to target gaps and guide AI exploration into less-covered user flows.

- Automate reporting and triage: Connect AI QA to ticketing tools like Jira or Linear to classify and prioritize bugs automatically. This streamlines developer workload and speeds up fixes.

- Monitor and calibrate: Track detection rates, false positives, and fix times to refine AI test behavior. Continuous feedback keeps results accurate and relevant as the product evolves.
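For the monitoring step, the metrics can be as simple as two ratios computed from pilot results. The sketch below is one possible way to define them, not a prescribed method: the `PilotStats` fields and the sample numbers are made up, and "detection rate" is interpreted here as recall, meaning the share of real bugs the agent caught.

```python
from dataclasses import dataclass


@dataclass
class PilotStats:
    true_positives: int   # real bugs the AI agent flagged
    false_positives: int  # flags that human reviewers judged not to be bugs
    missed_bugs: int      # real bugs found later by humans or customers


def detection_rate(s: PilotStats) -> float:
    """Share of real bugs the agent caught (recall)."""
    total = s.true_positives + s.missed_bugs
    return s.true_positives / total if total else 0.0


def false_positive_share(s: PilotStats) -> float:
    """Share of the agent's flags that were not real bugs (1 - precision)."""
    flagged = s.true_positives + s.false_positives
    return s.false_positives / flagged if flagged else 0.0


# Made-up pilot numbers, for illustration only.
stats = PilotStats(true_positives=45, false_positives=5, missed_bugs=15)

print(f"detection rate: {detection_rate(stats):.0%}")              # 75%
print(f"false-positive share: {false_positive_share(stats):.0%}")  # 10%
```

Tracking both numbers per release gives the human reviewers in the pilot a concrete basis for deciding when to widen the agent's role.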

“The goal isn’t to replace your QA team. It’s to make them faster, sharper, and impossible to outpace.” — Vilhelm von Ehrenheim

Want to see the numbers for your own team?

Use our Revenue at Risk (RAR) Calculator to estimate how much AI-driven QA could save you in time, cost, and defect reduction.

By starting with business-critical flows, maintaining human oversight, and leaning on guided adoption from a proven partner, companies can see immediate ROI and build a QA capability that grows alongside AI-accelerated development.

Looking Ahead — The AI-Driven SDLC

The shift to AI-driven software development is already underway. And it’s accelerating. The adoption curve for AI-assisted coding will soon reach the point where it’s no longer a competitive advantage, but the baseline, which is already happening with AI in general.

In this environment, teams that still treat QA as an afterthought will find themselves drowning in technical debt, losing customers, and struggling to keep pace.

In the near term, QA will no longer be a separate phase. AI-powered testing agents will run continuously alongside coding, integrated into every stage of the pipeline.

The “seesaw” between speed and quality will be balanced dynamically, with QA capacity scaling instantly to match development velocity. T-shaped professionals (engineers with both coding and quality skills) will thrive in smaller, more efficient teams, supported by AI that handles the repetitive, high-volume work of test execution and validation.

The role of QA will be reframed. No longer just “bug catchers,” quality teams will be revenue guardians, ensuring that every release sustains customer trust and competitive edge.

“The future belongs to teams that treat quality as a growth engine, not a safety net.” — Vilhelm von Ehrenheim

This is the trajectory QA.tech is already enabling for its clients, closing the gap between AI-speed development and AI-speed quality assurance. The companies that embrace this shift now won’t just adapt to the AI-driven SDLC; they’ll be the ones defining it.
