AI Dev Tool Stack for 2026

Gone are the days when development tools were straightforward.

The workflow was simple, built around predictable, manual tools. You’d open an editor, write code line by line, and push it to a GitHub repository, hoping everything worked.

In 2026, though, development tools have evolved into AI-driven systems that understand your source code, reason about code context and changes, generate test cases almost like a human tester, and even help guide deployments.

However, faster code generation doesn’t automatically mean faster shipping. Tools like Cursor and Copilot can help you write code quickly, but what really matters is whether it will actually work when users interact with it. You still need to verify everything, and more code written means more places for bugs to hide.

In my opinion, the companies that manage to automate the entire development lifecycle, from the very first line of code all the way to production monitoring, will be the ones that come out ahead in 2026.

What Teams Are Investing in

Companies are now shifting the conversation from “Which AI tool should be used for coding?” to “Which agent should own our testing process?” Teams are investing more in AI agents that can manage their workflow autonomously and ramp up development speed.

I’m seeing engineering teams put more emphasis on agentic development, often starting with a pilot phase. Instead of hiring another QA engineer or adding more manual testers to the team, they are investing in AI agents that can do this work autonomously and consistently.

Full-stack AI approaches are now becoming the competitive standard. Take QA as an example: companies that only automate coding but still manually review and test are outpaced by those that integrate automated AI agents in their pipeline.

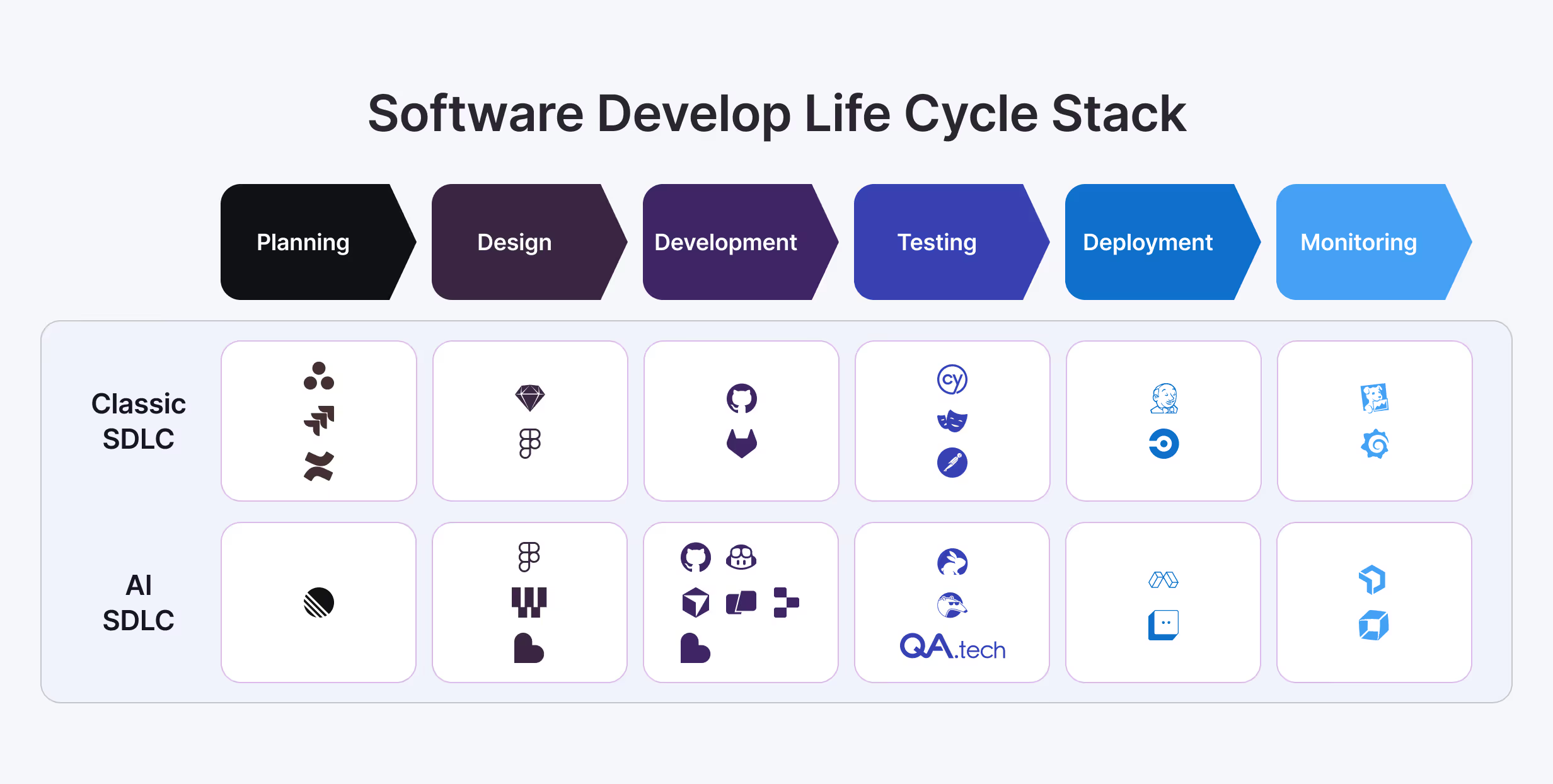

What the Development Tools Stack Looks Like in 2026

Daniel Petterson, CEO of QA.tech, has laid out his vision for the future of the SDLC, in which testing goes far beyond writing scripts. He has explained how agentic AI will be able to create tests automatically, run them on every PR, understand context, and provide feedback for human review. This future is closer than you may think.

Here are some types of tools you can already count on:

Code Generation Tools

Coding tools are the foundation for building applications. They have moved well beyond simple IDEs and autocomplete. These tools fall into multiple categories, such as:

- Chat-based: These tools are passive and answer only when asked. Most context-aware coding tools have an integrated chat capability. Examples include ChatGPT, Claude Code, and Copilot.

- Context-aware: Tools like Cursor, Windsurf, and Context7 build an understanding of your codebase as a whole, even when it is large. As expected, they can complete the line you’re writing. However, they also know which utility functions and naming conventions you use and how your authentication works. When you ask them to add a feature, they can modify multiple files and folders to implement it.

- Agentic AI: These tools go a step further. Apart from suggesting lines, Claude Code, Kiro AI (Amazon), GitHub Copilot, Traycer AI, and Blackbox AI also plan your next features, break them down into steps, work on each step autonomously, and then tie the steps together by applying code changes across multiple files.
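To make the plan, break down, and execute loop concrete, here is a minimal sketch. It is not how any of the tools above are implemented: `plan_feature`, `run_agent`, the file names, and the hard-coded plan are all hypothetical stand-ins for what a real agent would get from a model.

```python
from dataclasses import dataclass


@dataclass
class Step:
    description: str
    files: list[str]
    done: bool = False


def plan_feature(feature: str) -> list[Step]:
    """Hypothetical planner; a real agent would ask a model for this plan."""
    return [
        Step(f"Add data model for {feature}", ["models.py"]),
        Step(f"Add API endpoint for {feature}", ["api.py", "routes.py"]),
        Step(f"Add tests for {feature}", ["test_api.py"]),
    ]


def run_agent(feature: str) -> dict[str, list[str]]:
    """Work through each planned step and collect the edits per file."""
    changes: dict[str, list[str]] = {}
    for step in plan_feature(feature):
        for path in step.files:
            # A real agent would edit the file here; we only log the intent.
            changes.setdefault(path, []).append(step.description)
        step.done = True
    return changes


changes = run_agent("invoices")
```

The point of the sketch is the shape of the loop: one request fans out into several steps, and each step can touch more than one file.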

Code Review and Quality

You can’t always rely on human reviewers. That’s why AI code review agents have become an essential part of the development tools stack.

These tools serve as the first line of defense before testing. They provide useful feedback, speed up the process, and reduce developers' manual workload, which lets them focus on critical issues. Tools like CodeRabbit and Qodo are part of the modern stack and review every PR. SonarQube and similar tools are used for security and quality gates, while some teams rely on the built-in code review features of Cursor and Copilot.

As a rough rule of thumb, AI can catch about 80% of bugs or issues, which frees humans to focus on architectural correctness and business logic.

QA and Testing

You are automating the code generation and code review processes, so why are you still manually writing test scripts for your app features? The solution lies in AI-based testing tools. However, not all AI testing is created equal. You need tools that go beyond test scripting, such as agents that learn your entire app, understand how everything works, and generate tests based on actual user-behavior patterns.

QA.tech is a prime example of an AI-based agent that learns your app to find bugs autonomously. It scans the app, builds a knowledge graph (the agent’s memory), understands how the app works, and generates tests. It also lets you connect your GitHub repository to test every PR you push and supports testing via chat (something many tools lack).
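The idea of turning an app map into tests can be sketched in a few lines. This is an illustration only and makes no claim about how QA.tech works internally: `APP_GRAPH`, the page names, and `generate_flows` are hypothetical, and the graph is hand-written rather than learned by scanning an app.

```python
from collections import deque

# Hypothetical app map: page -> list of (action, destination page).
APP_GRAPH = {
    "login": [("submit credentials", "dashboard")],
    "dashboard": [("open settings", "settings"), ("open billing", "billing")],
    "settings": [],
    "billing": [("download invoice", "billing")],
}


def generate_flows(graph, start, max_steps=3):
    """Breadth-first walk over the graph. A flow ends when the current page
    has no untried actions left or when the step limit is reached."""
    flows = []
    queue = deque([(start, [], frozenset())])
    while queue:
        page, actions, seen = queue.popleft()
        untried = [(a, d) for a, d in graph.get(page, []) if (page, a) not in seen]
        if not untried or len(actions) == max_steps:
            if actions:
                flows.append(actions)
            continue
        for action, dest in untried:
            queue.append((dest, actions + [action], seen | {(page, action)}))
    return flows


flows = generate_flows(APP_GRAPH, "login")
for flow in flows:
    print(" -> ".join(flow))
```

Each returned flow is a candidate end-to-end test, for example logging in, opening billing, and downloading an invoice. A real agent would also need assertions for each step, which this sketch leaves out.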

Other tools in this space take different approaches. Qodex uses an agentic method for API testing and security, while testRigor autonomously generates tests that adapt as your UI changes, fixing broken tests without human intervention.

Deployment and Monitoring (Observability)

Observability is widely seen as a key technology for the future, especially as AI and agentic development take center stage. In a modern dev tool stack, AI-powered observability doesn’t just wake you up at 2 a.m. with an alert. It wakes you up with the solution.

Modern platforms like Datadog now include AI features such as LLM observability. These provide end-to-end tracing across different AI agents, with metrics like latency and token usage alongside logs. Vercel offers built-in AI that can explain why monolith builds fail or show what has changed between builds.
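To show what per-agent tracing with latency and token usage means in practice, here is a minimal standard-library sketch. It does not use the Datadog or Vercel APIs: `Span`, `Trace`, `fake_planner`, and `fake_coder` are hypothetical names, and the token counts are made up.

```python
import time
from dataclasses import dataclass


@dataclass
class Span:
    agent: str
    latency_s: float
    prompt_tokens: int
    completion_tokens: int


class Trace:
    """Collects one span per agent call so a whole request can be inspected."""

    def __init__(self):
        self.spans = []

    def record(self, agent, fn, *args):
        """Time fn; fn must return (result, prompt_tokens, completion_tokens)."""
        start = time.perf_counter()
        result, prompt_tokens, completion_tokens = fn(*args)
        latency = time.perf_counter() - start
        self.spans.append(Span(agent, latency, prompt_tokens, completion_tokens))
        return result

    def total_tokens(self):
        return sum(s.prompt_tokens + s.completion_tokens for s in self.spans)


def fake_planner(task):
    # Stand-in for a model call; the token counts are invented.
    return f"plan for {task}", 12, 30


def fake_coder(plan):
    # Stand-in for a second agent that consumes the planner's output.
    return f"code for {plan}", 40, 120


trace = Trace()
plan = trace.record("planner", fake_planner, "login page")
code = trace.record("coder", fake_coder, plan)
```

With spans like these, you can ask which agent in a chain was slow or expensive, which is the kind of question an observability platform answers at scale.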

I’m not suggesting that you need every observability feature under the sun. Most teams do just fine with the basics. However, it does seem worthwhile to invest in some level of AI functionality, since it transforms observability from reactive (“this is what’s broken, and I need to figure it out”) into proactive (“this is what’s broken, here’s why it happened, and here are some potential fixes”).

Why AI Testing Is Not Optional in 2026

AI code generation tools are generally fast, even though they sometimes produce unexpected results or small logic errors that compile successfully but fail in practice. It’s the “after-coding” phase, or verification, where teams often get stuck.

Manual testing slows this process down, which is why modern AI QA fits right in the middle of your pipeline, between rapid code generation and stable deployment. AI-powered testing will help you maintain efficiency throughout development. Plus, with AI agents running continuously on your app, you will also gain confidence to ship features faster.
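A rough calculation shows why the verification step matters more than coding speed. The stage names and rates below are invented for illustration, and `pipeline_throughput` is a hypothetical helper, not a measurement of any real team.

```python
def pipeline_throughput(stages):
    """Return the slowest stage and its rate; the pipeline cannot go faster."""
    bottleneck = min(stages, key=stages.get)
    return bottleneck, stages[bottleneck]


# Hypothetical rates, in changes each stage can handle per day.
before = {"coding": 10, "review": 8, "manual testing": 3, "deploy": 20}
faster_coding = {**before, "coding": 30}
faster_testing = {**before, "manual testing": 12}

print(pipeline_throughput(before))          # ('manual testing', 3)
print(pipeline_throughput(faster_coding))   # ('manual testing', 3)
print(pipeline_throughput(faster_testing))  # ('review', 8)
```

Tripling coding speed changes nothing in this toy model, while speeding up testing lifts throughput until the next-slowest stage (here, review) becomes the limit.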

Building Your 2026 AI Tool Stack the Right Way

Here’s my take on building a modern AI tool stack for 2026:

- Don’t replace everything at once. Find your biggest pain point, and start working on it. Alternatively, if your critical flows are already covered by existing tests, start with coverage gaps, and let AI agents explore and test around them.

- Switching to AI-based IDEs should be your next step. Migrating to Cursor or Windsurf gives you codebase-aware assistance right away, and your teams are likely to become more productive as a result.

- Automating PR reviews in your workflow should be another key focus. You can achieve this by installing GitHub Apps like CodeRabbit or Qodo for code review and integrating QA.tech to automatically test every PR before it merges. This is a low-effort, high-reward change.

- Then, integrate AI testing tools like QA.tech or Qodex. Let them learn your app and generate tests autonomously.

The idea is to have a balanced bundle of tools. Keep your existing processes, slowly add AI agents to handle repetitive tasks, and you’ll be good to go!

Final Thoughts

Your pipeline is only as fast as its slowest step. And unsurprisingly, for most teams, that’s manual testing. Luckily, AI agents can handle your end-to-end testing for both existing and future products. In fact, our most successful customers have integrated QA.tech into their current workflows without waiting for a new project or a rewrite.

If you’re still manually testing, now is the right time to reconsider your strategy. The ROI is immediate, and the impact on your release speed is dramatic.

Ready to see how AI testing fits into your stack? Book a demo call with QA.tech, and our team will show you how AI agents can handle your E2E testing automatically.
