Is QA Automation Worth It? The Real ROI of Intelligent Testing

Imagine this scenario: your CFO looks up from the spreadsheet and says, “Another QA tool? We already have testing covered, don’t we?” You’ve probably been in that budget meeting, and, if you’re being honest, you’ll admit it’s a fair question, especially given the current climate. The tech sector has seen significant layoffs, and companies are increasingly pushing development teams to take on quality work themselves. But even though developers are highly skilled at building software, QA is a whole new ball game.

Now, QA budgets may be frozen, but investments in tools, especially AI-driven ones, could still be on the table. And that’s where intelligent testing comes into play.

I understand if you’re a bit skeptical. A lot of QA automation ends up being fragile, and teams quickly find themselves maintaining tests instead of shipping quality. But AI-driven testing changes the equation. QA engineers are not writing scripts anymore; they are guiding an agent that understands how the application works. Moving away from rigid scripts to more adaptive testing is what finally delivers the boost in ROI.

How QA Teams Underestimate the Hidden Costs of Manual QA

In a budget meeting, QA costs are usually calculated the same way. Employee salaries, tools, and maybe some infrastructure are taken into account, but what is often missed is the opportunity cost.

The opportunity cost refers to what your team isn’t building. If an engineer works on debugging checkout flows for an ecommerce app for hours, those same hours are not spent architecting a better payment system. Similarly, if your QA lead is running the same test suite for the tenth time this month, they are now wasting their time on button clicks instead of contributing to the strategic business aims.

In these circumstances, hiring more testers doesn’t help. Headcount and costs grow linearly, without a corresponding increase in speed or output.

Take Pricer, a retail tech company, as an example. They were growing fast and adding new features weekly, but their QA couldn’t keep up. They needed to test more features, yet they were not in a position to hire more testers. Every sprint came down to two choices: ship faster and accept the risk, or test thoroughly and miss deadlines.

This tension of having to choose between shipping quickly and testing properly is a challenge most engineering teams are facing today.

What Bugs Actually Cost

There’s a classic rule of thumb in software engineering known as the 100x multiplier. It helps you estimate the cost of bugs in a manual QA process, and it goes like this:

- A bug found in development costs 1x to fix;

- A bug found in QA/staging costs 10x to fix;

- A bug found in production or after deployment costs 100x to fix, and by then, it’s too late.
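To make the multiplier concrete, here is a minimal sketch of the arithmetic in Python. The stage names, the bug counts, and the base cost of 100 per bug are made-up assumptions for illustration, not figures from any real project; only the 1x/10x/100x ratios come from the rule of thumb above.

```python
# Relative cost multipliers from the 1x / 10x / 100x rule of thumb.
STAGE_MULTIPLIER = {"development": 1, "qa_staging": 10, "production": 100}


def total_fix_cost(bugs_by_stage: dict[str, int], base_cost: float) -> float:
    """Sum the cost of fixing bugs, weighted by the stage where each was found."""
    return sum(
        count * STAGE_MULTIPLIER[stage] * base_cost
        for stage, count in bugs_by_stage.items()
    )


# Hypothetical release with 20 bugs in total (illustrative numbers only).
late = {"development": 5, "qa_staging": 10, "production": 5}
early = {"development": 14, "qa_staging": 5, "production": 1}

print(total_fix_cost(late, 100.0))   # 60500.0
print(total_fix_cost(early, 100.0))  # 16400.0
```

The bug count is the same in both cases. Only the stage where the bugs are caught changes, and under these assumptions that alone cuts the total by roughly 73%.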

The problem here isn’t just fixing the bug; that part is often easy. The main issue is the deployment delay that comes with it. A critical bug that requires a rollback on a Friday afternoon stops your momentum. It may even cause you to blow your budget, because the marketing campaign you’ve been working on is now delayed or the release has to be pushed.

And then there’s churn. As you’re probably aware, many modern users do not report bugs. They simply exit and never return. If a user hits a broken signup flow in Safari, that’s not a Jira ticket waiting to be filed; it’s immediate revenue loss.

In today’s market, teams have to ship faster to be competitive, but not at the cost of breaking things and losing revenue. Manual QA can’t keep pace, and traditional test automation is brittle. Something has got to change.

Traditional Automation: Why It Doesn’t Provide a Good ROI

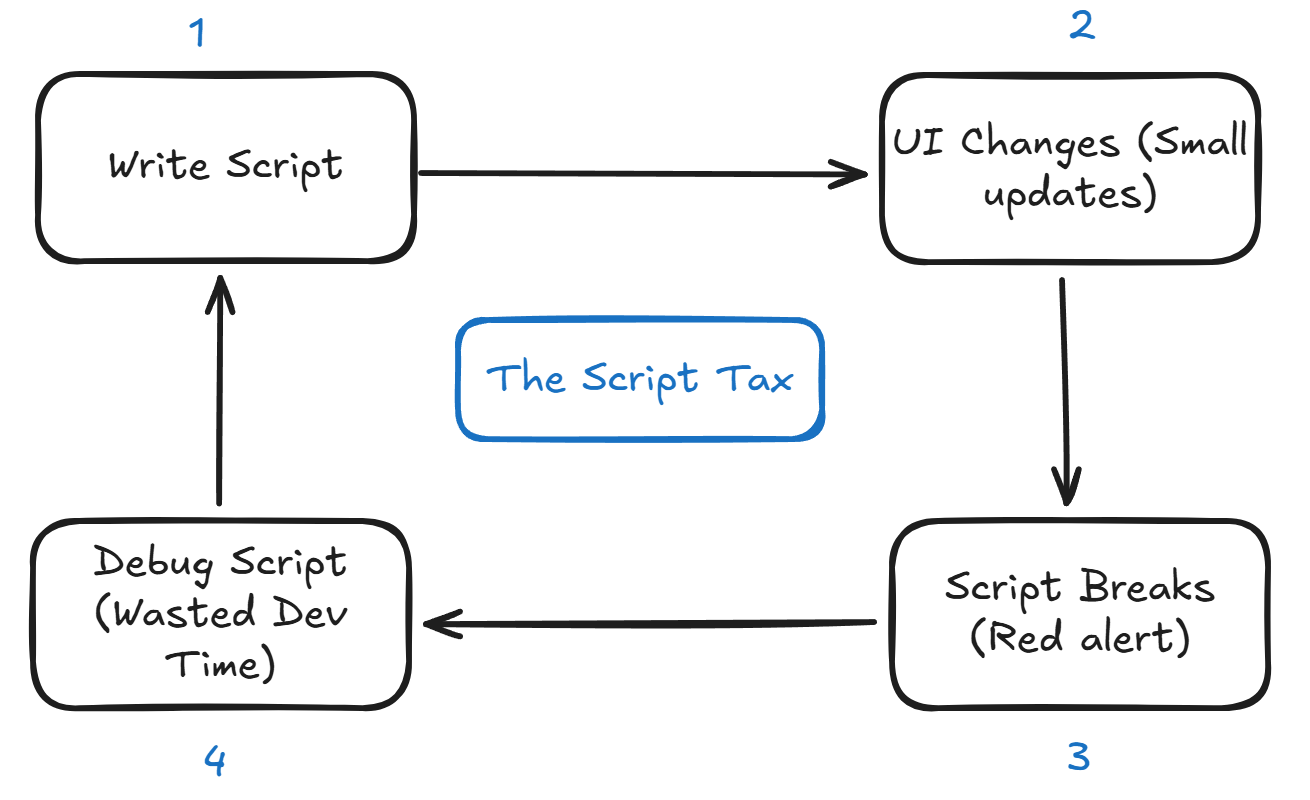

Traditional automation promised to solve the problem of manual QA, but it didn’t. We were sold the idea of “write once, run forever,” but what we actually got was “write once, debug multiple times.” Traditional scripts are too brittle. They depend on CSS classes, selectors, XPath, and more. As such, they represent a maintenance burden on the QA team and sometimes on developers, too.
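The brittleness is easy to reproduce in miniature. The sketch below is not a real browser test: it uses Python’s standard-library XML parser as a stand-in for the DOM, and the markup, class names, and helper functions are all hypothetical. It only illustrates why a locator tied to a styling detail breaks after a redesign while one tied to what the user sees does not.

```python
import xml.etree.ElementTree as ET

# Two hypothetical versions of the same checkout button,
# before and after a redesign that renamed the CSS class.
BEFORE = '<div><button class="btn-primary">Pay now</button></div>'
AFTER = '<div><button class="cta-main">Pay now</button></div>'


def find_by_class(markup: str):
    """Scripted-style locator: tied to a styling detail (the class name)."""
    return ET.fromstring(markup).find(".//button[@class='btn-primary']")


def find_by_text(markup: str):
    """Locator tied to the text the user actually sees on the button."""
    return ET.fromstring(markup).find(".//button[.='Pay now']")


for label, markup in (("before", BEFORE), ("after", AFTER)):
    print(
        label,
        "class:", find_by_class(markup) is not None,
        "text:", find_by_text(markup) is not None,
    )
# before class: True text: True
# after class: False text: True
```

Nothing about the button’s behavior changed between the two versions, yet the class-based locator now fails. Every such failure is a test someone has to investigate and repair.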

You’ve invested in an automation tool to save time, but you’re now paying additional costs (the script tax) to hire a dedicated engineer who can keep tests from breaking.

And then there’s the famous “AI Wrapper” trap. Basically, many tools claim to use AI to automate your QA, but it turns out they are only using it to write test scripts for you. Don’t fall for it. It’s like relying on an LLM to generate a Playwright test script, only for the result to be just as rigid as one written by hand.

How Intelligent Agents Fix the Maintenance Trap

The return on investment (ROI) from AI testing is significantly higher than what traditional automation can offer. What once took hours of scripting now takes a single prompt, and results come back in minutes. Instead of maintaining heavy, brittle scripts, your team can now focus on writing prompts.

We’re also witnessing a shift from scripted automation to agentic testing. Tools like QA.tech build an understanding of your app and create tests that you may not have even thought of. Unlike Playwright, where you have to define and memorize CSS selectors, these agents work from the user’s intent. Plus, they self-heal, which is something QA automation has traditionally lacked.

Remember Pricer? By investing in agentic testing, they didn’t just solve their immediate challenges. They were able to scale without hiring additional QA engineers. The result was a clear increase in ROI and the elimination of the ongoing overhead of manual testing and automation maintenance.

Do We Need a POC, and What Should We Measure?

At this point, your procurement team may ask, “Do they offer a POC? How can we be sure this will work for us?” The answer is yes: QA.tech does provide a guided proof of concept (POC) so you can validate agentic testing in your own environment.

Here are the four criteria you should focus on:

- First, track the time spent setting up traditional automation and running regression tests, then compare it with what the AI agent handles automatically. Be honest, as the gap you get directly reflects your ROI.

- Second, measure test coverage by comparing the number of tests executed per week before and after introducing the AI agent. The coverage should increase with AI.

- Third, compare the bugs caught using traditional automation with those identified by the AI agent before production. Keep in mind that the goal isn’t perfection, but shifting left, that is, catching bugs earlier in the cycle.

- Finally, remember that you need to provide good input like clear prompts, credentials, test data, objectives, and more because AI agents learn your app over time. No need to worry, though: you’ll start seeing working tests within days.
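The first three criteria are measurable, so it can help to record them in one place during the POC. The following is a minimal sketch in Python under stated assumptions: the `PocSnapshot` fields, the `compare` function, the hourly cost, and every number in the example are hypothetical and are not part of any QA.tech tooling.

```python
from dataclasses import dataclass


@dataclass
class PocSnapshot:
    """One measurement period in the POC (hypothetical field names)."""
    maintenance_hours_per_week: float  # setup, regression runs, script fixes
    tests_run_per_week: int
    bugs_caught_pre_production: int


def compare(baseline: PocSnapshot, agent: PocSnapshot, hourly_cost: float) -> dict[str, float]:
    """Compare the traditional-automation baseline with the AI-agent period."""
    if baseline.tests_run_per_week <= 0:
        raise ValueError("baseline must include at least one test run per week")
    hours_saved = baseline.maintenance_hours_per_week - agent.maintenance_hours_per_week
    return {
        "hours_saved_per_week": hours_saved,
        "weekly_savings": hours_saved * hourly_cost,
        "coverage_ratio": agent.tests_run_per_week / baseline.tests_run_per_week,
        "extra_bugs_caught": agent.bugs_caught_pre_production
        - baseline.bugs_caught_pre_production,
    }


# Illustrative numbers only; replace them with your own measurements.
baseline = PocSnapshot(maintenance_hours_per_week=12, tests_run_per_week=150, bugs_caught_pre_production=4)
agent = PocSnapshot(maintenance_hours_per_week=3, tests_run_per_week=450, bugs_caught_pre_production=7)

print(compare(baseline, agent, hourly_cost=80.0))
```

With these made-up inputs, the comparison reports 9 hours saved per week, 720 in weekly savings, three times as many tests run, and 3 additional bugs caught before production. The fourth criterion, input quality, is not a number, but it strongly affects the other three.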

When Automation Isn’t Worth It

Before you start implementing AI, you need to understand when traditional automation is sufficient and when intelligent testing may be the better choice.

If you’re a pre-product-market-fit startup and your app changes every week, it may be too early for automated testing. When the product itself is changing that quickly, even intelligent testing won’t be able to keep up, and you’ll likely end up with bugs anyway. Wait until everything stabilizes.

For companies that have very simple workflows or single-page apps, manual testing may still suffice. If deployments are quarterly or the flow is basic, traditional automation might not even add much value, although intelligent testing may still provide some benefits.

The real value from automation appears in the critical path (where you generate revenue on your platform). You want to ensure that every time someone logs in, checks out, is onboarded, or searches for something, they can navigate through those experiences without fail. However, you’ll still need human judgment to evaluate UX and explore edge cases (different paths a user might take) in order to determine if a feature will actually resolve the user’s problem.

Conclusion

The goal of intelligent testing isn’t to replace human testers. It’s to provide them with AI tools that increase their efficiency and speed. You don’t want your engineers wasting their time debugging button clicks. Instead, you want them exploring edge cases, simulating malicious users, and spotting “vibes” that are off.

Intelligent agents take care of repetitive tasks like regression testing and sanity checks. This allows your team to reinvest their time and resources in building, coding, and the testing that needs human judgment.

And if the CFO asks about the cost of these tools and services, the answer is simple: it’s an investment in a testing assistant that will help your team ship products quickly instead of spending time on manual processes and testing.

Schedule a call with us today to see how easily these agents can learn your application!
